Microsoft Azure

Overview

Microsoft Azure is a cloud computing platform that provides a range of services, including databases, mobile, networking, security, storage, web, Windows Virtual Desktop, and more.

Connect Lytics to Microsoft Azure Storage, Event Hub, and Azure SQL to import data into Lytics and export data from Lytics. This allows you to leverage Lytics behavioral scores, content affinities, and insights to drive your marketing efforts through Azure.

Authorization

If you haven’t already done so, you will need to set up a Microsoft Azure account before you begin the process described below. If you are new to creating authorizations in Lytics, see the Authorizations documentation for more information.

- Select Microsoft Azure from the list of providers.

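- Select the method for authorization. Note that different methods support different job types:
  - Azure Storage Connection String: Import Data, Export Audiences (Blob Storage) JSON, Export Audiences (Blob Storage) CSV, and Export Events (Blob Storage).
  - Azure Event Hub: Export Audiences (Event Hub).
  - Azure SQL Database: Import SQL Table.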

- Enter a Label to identify your authorization.

- (Optional) Enter a Description for further context on your authorization.

- Complete the configuration steps needed for your authorization. These steps will vary by method.

- Click Save Authorization.

Azure Storage Connection String

Use the Microsoft Azure Storage Connection String method to import files from, or export files to, Azure Blob Storage. Refer to Azure's documentation to set up the connection string for your Blob Storage account, then follow the steps below to connect Lytics to Azure:

- In the Connection String box, enter the connection string for the Blob storage.

- (Optional) In the PGP Key box, enter a PGP public key to encrypt Azure Blob files.
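The connection string itself is a semicolon-separated list of Key=Value pairs; an account-key string typically carries DefaultEndpointsProtocol, AccountName, AccountKey, and EndpointSuffix. A quick local check can catch a truncated copy before you paste it into the Connection String box. The sketch below is a hypothetical helper, not part of Lytics or the Azure SDK, and treating AccountName and AccountKey as required is an assumption that only fits account-key strings (SAS-based strings use different keys).

```python
# Hypothetical helper: sanity-check an Azure Storage connection string locally.
# Assumption: an account-key style string; SAS-based strings use other keys.
REQUIRED_KEYS = {"AccountName", "AccountKey"}


def parse_connection_string(conn_str: str) -> dict:
    """Split 'Key1=Value1;Key2=Value2;...' into a dict.

    Only the first '=' in each segment separates key from value, because
    base64 account keys usually end with '=' padding.
    """
    pairs = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing ';'
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"segment without '=': {segment!r}")
        pairs[key.strip()] = value.strip()
    return pairs


def missing_keys(conn_str: str) -> set:
    """Return the required keys that are absent from the connection string."""
    return REQUIRED_KEYS - set(parse_connection_string(conn_str))


example = (
    "DefaultEndpointsProtocol=https;AccountName=myaccount;"
    "AccountKey=bXlrZXk=;EndpointSuffix=core.windows.net"
)
print(parse_connection_string(example)["AccountKey"])  # bXlrZXk=
print(missing_keys(example))  # set()
```

Splitting on the first "=" only is the important design choice here: a naive split would cut the trailing padding off the account key.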

Azure Event Hub

Use the Microsoft Azure Event Hub authorization method to export Lytics Audiences to Azure Event Hub. You will need the Event Hub Connection String. Once obtained, enter the connection string in the Event Hub Connection String box.

Azure SQL Database

For Azure SQL Database, you will need the following credentials. If your Azure SQL server enforces IP firewall rules, you will need to add the Lytics production IP addresses to its allowlist; contact your Lytics account manager for the list of IPs.

- In the Server text box, enter your Azure SQL server.

- In the Port text box, enter your Azure SQL server port.

- In the Database text box, enter your Azure SQL database.

- In the User text box, enter your username.

- In the Password box, enter your password.
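As a hedged illustration of what these values usually look like: an Azure SQL server name is normally the fully qualified host <name>.database.windows.net, and the default port is 1433. The hypothetical AzureSqlCredentials class below only performs local checks on the values you plan to enter; it does not connect to the database and does not reflect how Lytics validates the form.

```python
from dataclasses import dataclass


@dataclass
class AzureSqlCredentials:
    """Values for the Azure SQL authorization form (illustrative only)."""
    server: str        # usually "<name>.database.windows.net"
    database: str
    user: str
    password: str
    port: int = 1433   # default SQL Server / Azure SQL port

    def problems(self) -> list:
        """Return human-readable issues to fix before saving the authorization."""
        issues = []
        if not self.server.endswith(".database.windows.net"):
            issues.append("server is usually the full <name>.database.windows.net host")
        if not 1 <= self.port <= 65535:
            issues.append("port must be between 1 and 65535")
        for name in ("database", "user", "password"):
            if not getattr(self, name):
                issues.append(f"{name} is empty")
        return issues


creds = AzureSqlCredentials("myserver.database.windows.net", "mydb", "lytics_reader", "s3cret")
print(creds.problems())  # []
```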

Import Data

Importing custom data stored in Azure Blob Storage to Lytics allows you to leverage Insights provided by Lytics data science to drive your marketing efforts.

Integration Details

- Implementation Type: Server-side Integration.

- Type: REST API Integration.

- Frequency: One-time or scheduled Batch Integration (can be hourly, daily, weekly, or monthly depending on configuration).

- Resulting Data: Raw Event Data or, if custom mapping is applied, new User Profiles or existing profiles with new User Fields.

This integration uses the Azure Storage Service API to read the CSV file stored in an Azure container. Each run of the job proceeds as follows:

- Finds the file selected during configuration using the Azure Blob Storage service.

- If found, reads the contents of the file.

- If configured to diff the files, compares the file to the data imported during the previous run.

- Filters fields based on what was selected during configuration.

- Sends event fields to the configured stream.

- Schedules the next run of the import, if it is a scheduled batch.
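The per-run steps above can be sketched as a pure function over CSV text that has already been read from the container. This is an illustration, not Lytics' implementation: the blob lookup and the write to the stream are left out, and representing the diff as a set of previously seen rows is an assumption of this sketch.

```python
import csv
import io


def run_import(csv_text, previous_rows=None, fields=None, delimiter=","):
    """Sketch of one import run over CSV text already read from the container.

    previous_rows: rows remembered from the last run (a set of tuples), used
                   for the optional diff step; None disables diffing.
    fields:        column names to keep; None keeps every column.
    Returns (events to send to the stream, rows to remember for the next diff).
    """
    reader = csv.DictReader(io.StringIO(csv_text), delimiter=delimiter)
    events = []
    current_rows = set()
    for row in reader:
        # A hashable snapshot of the whole row, in header order.
        snapshot = tuple(row.get(name) for name in reader.fieldnames)
        current_rows.add(snapshot)
        if previous_rows is not None and snapshot in previous_rows:
            continue  # diff: unchanged since the previous file, so skip it
        if fields:
            row = {name: row.get(name) for name in fields}
        events.append(row)
    return events, current_rows


first = "email,name,score\na@example.com,Ann,1\nb@example.com,Bob,2\n"
events, seen = run_import(first, fields=["email", "score"])
print(len(events))  # 2

second = "email,name,score\na@example.com,Ann,1\nb@example.com,Bob,3\n"
events, seen = run_import(second, previous_rows=seen, fields=["email", "score"])
print(events)  # [{'email': 'b@example.com', 'score': '3'}]
```

Only Bob's row is sent on the second run because his score changed; Ann's row is identical to the previous file and is skipped by the diff.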

Fields

Fields imported via CSV through Azure Storage Service will require custom data mapping.

Configuration

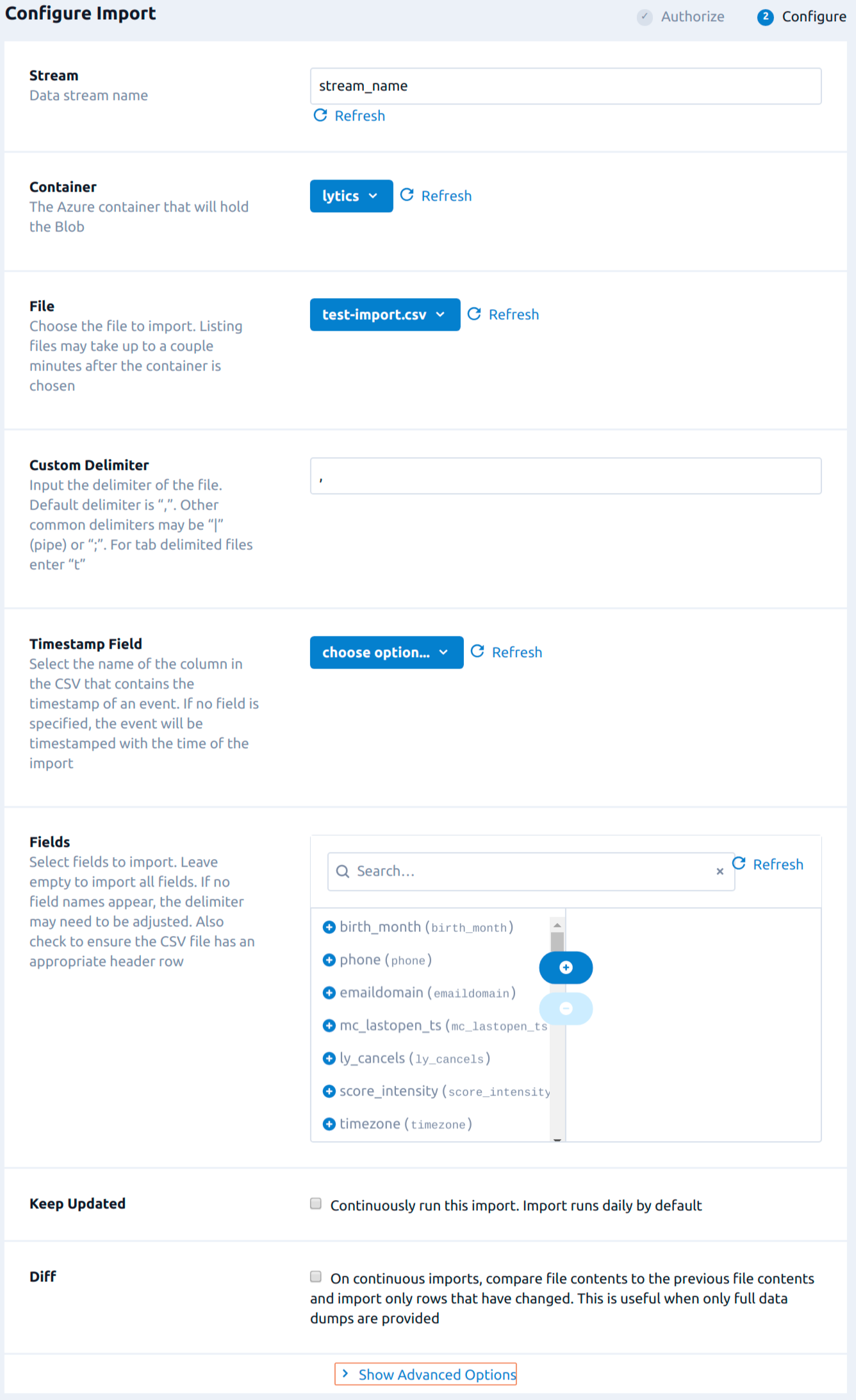

Follow these steps to set up and configure a CSV Import from Azure Storage in the Lytics platform. If you are new to creating jobs in Lytics, see the Data Sources documentation for more information.

- Select Microsoft Azure from the list of providers.

- Select the Import Data job type from the list.

- Select the Authorization you would like to use or create a new one.

- Enter a Label to identify this job you are creating in Lytics.

- (Optional) Enter a Description for further context on your job.

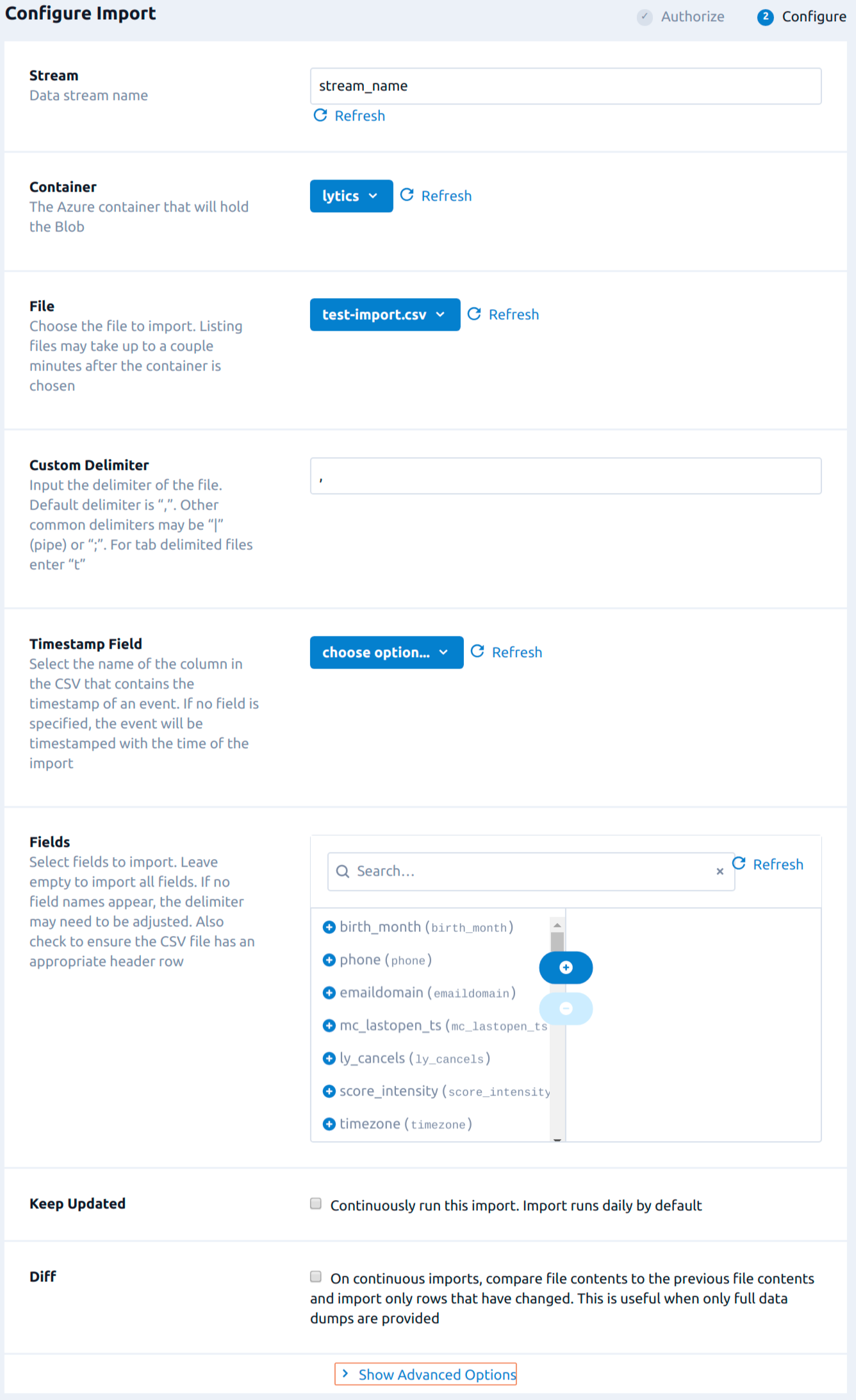

- In the Stream box, enter or select the data stream you want to import the file(s) into.

- From the Container drop-down, select the Azure container with the file you want to import.

- From the File drop-down, select the file to import. Listing files may take up to a couple of minutes after the container is chosen.

- (Optional) In the Custom Delimiter box, enter the delimiter used. For tab-delimited files, enter "\t". Only the first character is used (e.g., if "abcd" were entered, only "a" would be used as the delimiter).

- (Optional) From the Timestamp Field drop-down, select the name of the column in the CSV that contains the timestamp of an event. If no field is specified, the event will be timestamped with the time of the import.

- (Optional) Use the Fields input to select the fields to import. Leave empty to import all fields. If no field names appear, the Custom Delimiter may need to be adjusted. Also check to ensure the CSV file has an appropriate header row.

- (Optional) Select the Keep Updated checkbox to run the import on a regular basis.

- (Optional) Select the Diff checkbox on repeating imports to compare file contents to the previous file contents and import only rows that have changed. This is useful when full data dumps are provided.
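The Custom Delimiter and Timestamp Field rules above can be expressed as two small functions. This is a sketch under assumptions: it treats a blank delimiter as a comma, expects the tab escape to be typed as the two characters \t, and parses timestamps as ISO 8601 only, whereas Lytics may accept other formats. The function names are hypothetical.

```python
from datetime import datetime, timezone


def resolve_delimiter(value: str) -> str:
    """Custom Delimiter rule: the \\t escape means tab, otherwise only the
    first character is used. A blank value falls back to a comma (assumed)."""
    if not value:
        return ","
    if value == "\\t":  # the two characters backslash + t
        return "\t"
    return value[0]


def event_time(row: dict, timestamp_field, import_started: datetime) -> datetime:
    """Use the Timestamp Field when it has a value, else the import time.

    Assumption: timestamps are ISO 8601 strings such as 2023-05-01T12:00:00+00:00.
    """
    raw = row.get(timestamp_field) if timestamp_field else None
    if raw:
        return datetime.fromisoformat(raw)
    return import_started


started = datetime.now(timezone.utc)
print(repr(resolve_delimiter("abcd")))  # 'a'
print(repr(resolve_delimiter("\\t")))   # '\t'
print(event_time({"ts": "2023-05-01T12:00:00+00:00"}, "ts", started))
```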

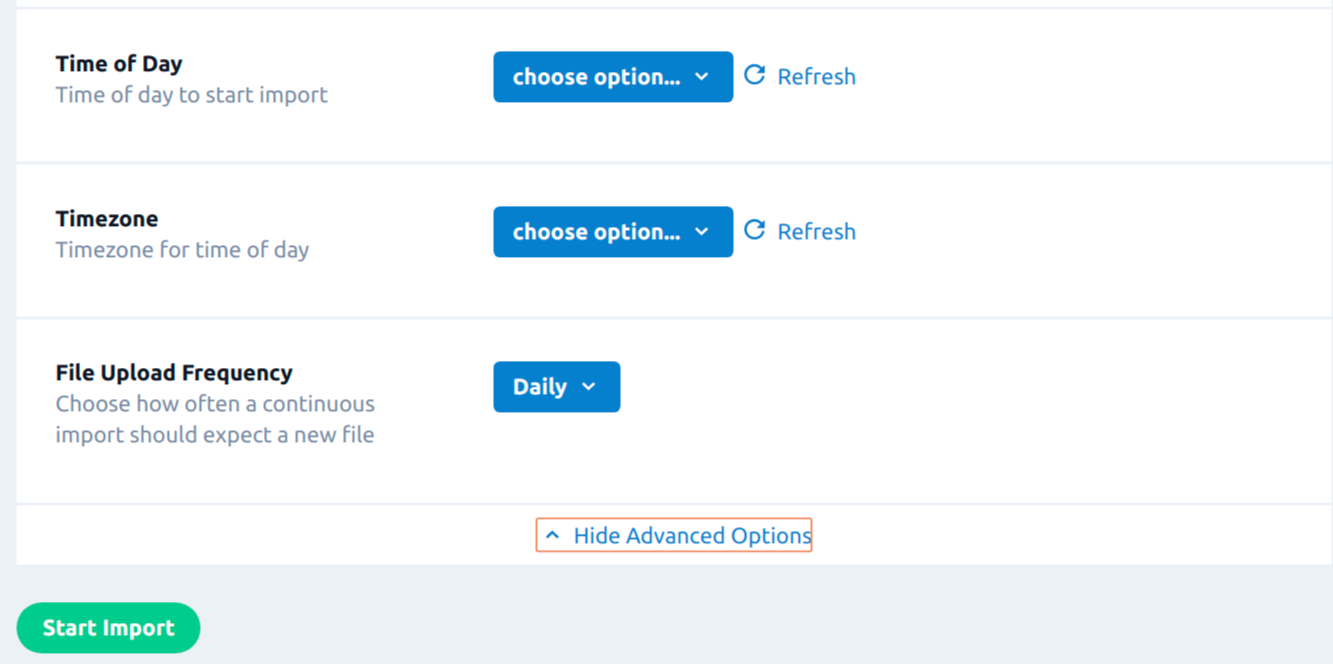

- Click on the Show Advanced Options tab to view additional Configuration options.

- (Optional) In the Prefix text box, enter the file name prefix. You may use regular expressions for pattern matching. The prefix must match the file name up to the timestamp. A precalculated prefix derived from the selected file will be available as a dropdown.

- (Optional) From the Time of Day drop-down, select the time of day at which the import should be scheduled after the first import. This only applies to the daily, weekly, and monthly import frequencies. If no option is selected, the import will be scheduled based on the completion time of the last import.

- (Optional) From the Timezone drop-down, select the time zone for the Time of Day.

- (Optional) From the File Upload Frequency drop-down, select the frequency to run the import.

- Click Start Import.

NOTE: For continuous imports, files should be named in the following format: timestamp.csv. The job determines the sequence of files from the timestamp. If no next file is received, the continuous import will stop and a new import will need to be configured.
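A minimal sketch of how a continuous import could pick the next file from a container listing, assuming names of the form <prefix><unix timestamp>.csv and a Prefix value that is a regular expression covering everything before the timestamp digits. next_file is a hypothetical helper, not a Lytics API; comparing timestamps as integers is a choice of this sketch.

```python
import re


def next_file(names, prefix_pattern, last_imported=None):
    """Return the oldest file newer than last_imported, or None if none exists.

    Assumes names shaped like <prefix><unix timestamp>.csv, where
    prefix_pattern is a regular expression that stops right before the
    timestamp digits.
    """
    pattern = re.compile(rf"(?:{prefix_pattern})(\d+)\.csv")

    def timestamp_of(name):
        match = pattern.fullmatch(name)
        return int(match.group(1)) if match else None

    last_ts = timestamp_of(last_imported) if last_imported else None
    best = None
    for name in names:
        ts = timestamp_of(name)
        if ts is None or (last_ts is not None and ts <= last_ts):
            continue  # not part of this sequence, or already imported
        if best is None or ts < best[0]:
            best = (ts, name)
    return best[1] if best else None


files = ["users_1700000000.csv", "users_1700172800.csv",
         "other_1700000000.csv", "users_1700086400.csv"]
print(next_file(files, "users_"))                          # users_1700000000.csv
print(next_file(files, "users_", "users_1700000000.csv"))  # users_1700086400.csv
print(next_file(files, "users_", "users_1700172800.csv"))  # None
```

A None result corresponds to the case described in the note: no next file has arrived, so the sequence cannot continue.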

Import SQL Table

Azure SQL Database is an intelligent, scalable, relational database service built for the cloud. By connecting Lytics directly to your Azure SQL instance, you can easily import full tables of audience and activity data to leverage Lytics' segmentation and insights.

Integration Details

- Implementation Type: Server-side Integration.

- Implementation Technique: Lytics SQL driver

- Frequency: Batch Integration

- Resulting data: User Profiles, User Fields, Raw Event Data.

This integration ingests data from your Azure SQL Database by directly querying the table selected during configuration. Once started, the job will:

- Runs a query to select and order the rows that have yet to be imported. If the import is continuous, the job saves the timestamp of the last row seen, and only the most recent data will be imported during future runs. To ensure consistent ordering of records and to improve performance for batch ingestion, the result of this query is written to a Local Temporary Table named in the format #TMP_{UUID}_{TIMESTAMP}, where UUID is a unique identifier associated with the job and TIMESTAMP is the Unix timestamp, in nanoseconds, marking the start of the current run. This table is only visible in the current session and is automatically dropped when the batch ingestion completes. For more information, see the Temporary Tables section of Microsoft's Transact-SQL documentation.

- Once the initial query completes, the result set is imported from the temporary table in batches, starting from the oldest row.

- Once the last row is imported, if the job is configured to run continuously, it sleeps until the next run. The time between runs can be selected during configuration.
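The run described above can be simulated in memory to show how the saved timestamp (watermark) and the oldest-first batching interact. The sketch assumes the UUID is rendered as 32 hex digits without hyphens (the actual rendering is not documented) and uses hypothetical id and ts fields; it does not talk to Azure SQL.

```python
import time
import uuid


def temp_table_name(job_id: uuid.UUID, started_ns: int) -> str:
    """Build a name shaped like #TMP_{UUID}_{TIMESTAMP}.

    The leading '#' is what makes it a session-local temporary table in
    T-SQL. Rendering the UUID as 32 hex digits is an assumption.
    """
    return f"#TMP_{job_id.hex}_{started_ns}"


def batches(rows, size):
    """Yield already-sorted rows in fixed-size batches, oldest first."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def run_once(rows, watermark, batch_size):
    """One run: select rows newer than the watermark, order them, batch them.

    Returns the batches and the new watermark (the last timestamp seen).
    """
    pending = sorted(
        (r for r in rows if watermark is None or r["ts"] > watermark),
        key=lambda r: r["ts"],
    )
    sent = list(batches(pending, batch_size))
    new_watermark = pending[-1]["ts"] if pending else watermark
    return sent, new_watermark


print(temp_table_name(uuid.uuid4(), time.time_ns()))
rows = [{"id": 1, "ts": 30}, {"id": 2, "ts": 10}, {"id": 3, "ts": 20}]
sent, watermark = run_once(rows, None, batch_size=2)
print([[r["id"] for r in batch] for batch in sent], watermark)  # [[2, 3], [1]] 30
```

On the next run, only rows with a timestamp greater than 30 would be selected.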

Fields

Fields imported through Azure SQL will require custom data mapping.

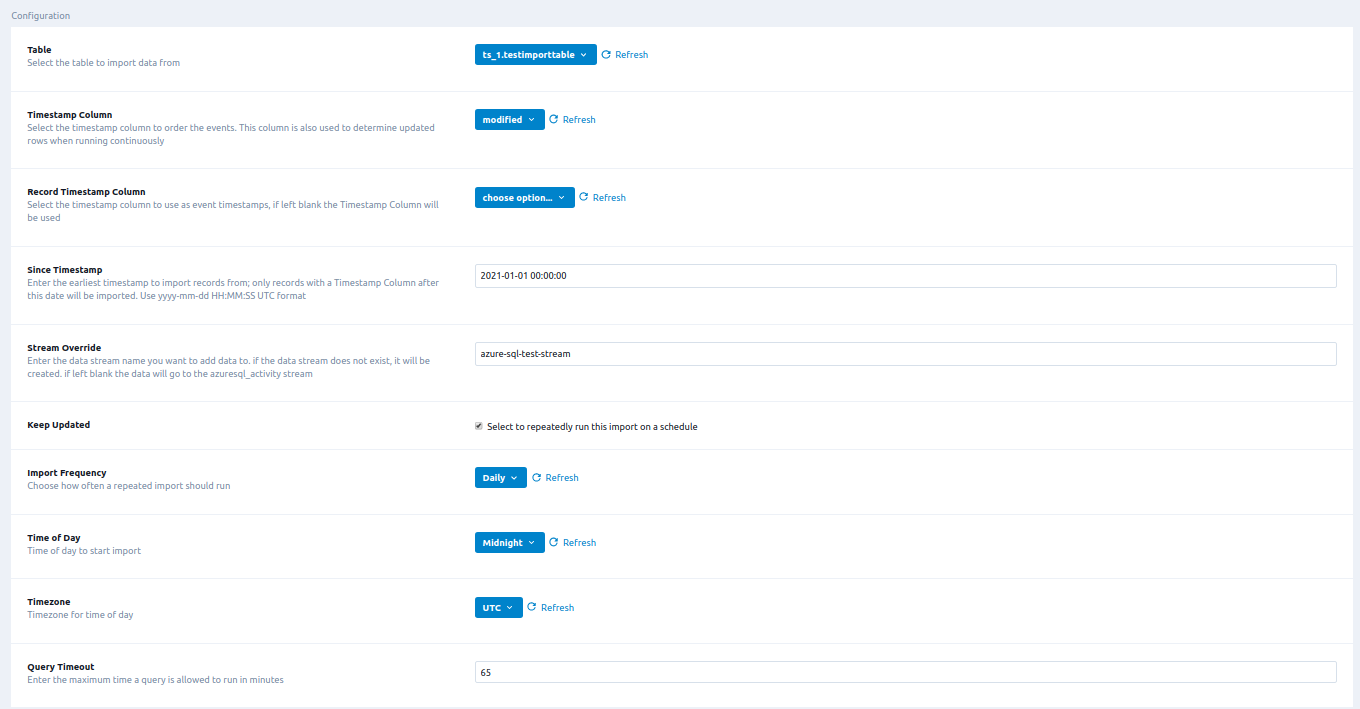

Configuration

Follow these steps to set up and configure an Azure SQL import job for Microsoft Azure in the Lytics platform. If you are new to creating jobs in Lytics, see the Data Sources documentation for more information.

- Select Microsoft Azure from the list of providers.

- Select Azure SQL Import from the list.

- Select the Authorization you would like to use or create a new one.

- Enter a Label to identify this job you are creating in Lytics.

- (Optional) Enter a Description for further context on your job.

- Complete the configuration steps for your job.

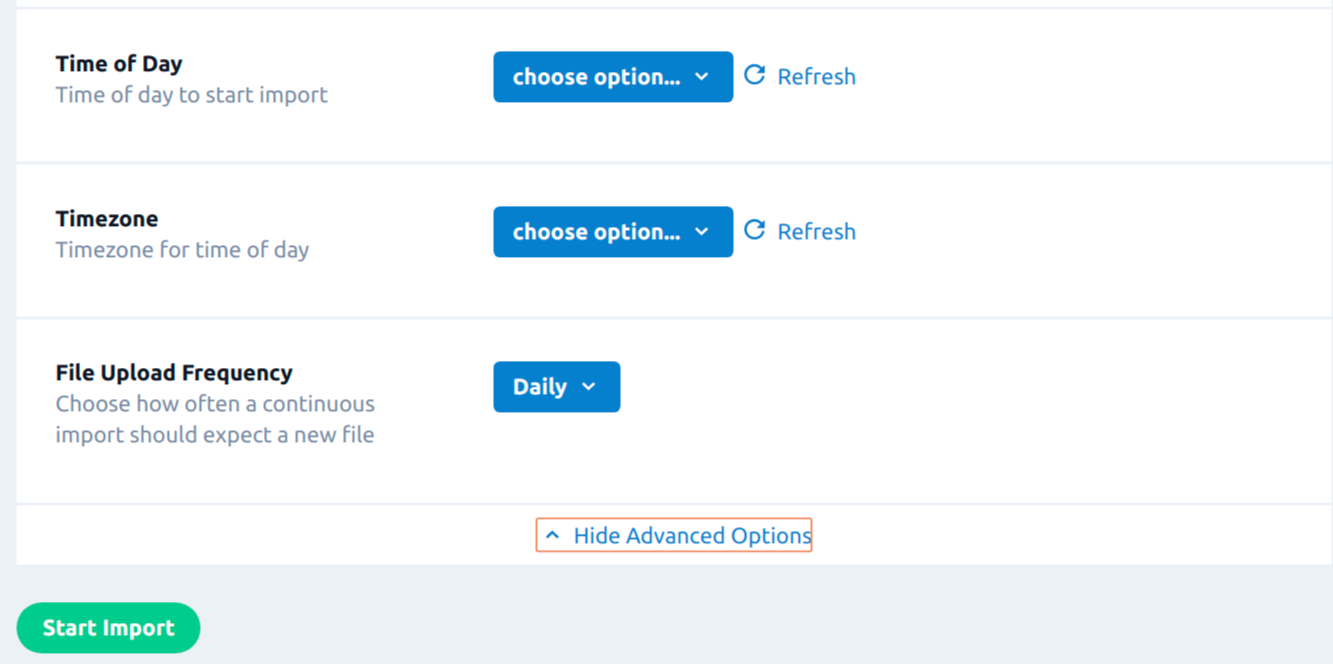

- From the Table input, select the table to import data from.

- From the Timestamp Column input, select the timestamp column to order the events. This column is also used to determine updated rows when running continuously.

- (Optional) From the Record Timestamp Column input, select the timestamp column to use as the event timestamp. If left blank, the Timestamp Column will be used.

- (Optional) In the Since Timestamp text box, enter the earliest timestamp to import records from; only records with a Timestamp Column value after this date will be imported. Use yyyy-mm-dd HH:MM:SS UTC format.

- (Optional) In the Stream Override text box, enter the name of the data stream you want to add data to. If the data stream does not exist, it will be created. If left blank, the data will go to the azuresql_activity stream.

- (Optional) Select the Keep Updated checkbox to repeatedly run this import on a schedule.

- (Optional) From the Import Frequency input, choose how often a repeated import should run.

- (Optional) From the Time of Day input, select the time of day to start the import.

- (Optional) From the Timezone input, select the timezone for the Time of Day.

- (Optional) In the Query Timeout numeric field, enter the maximum time, in minutes, that a query is allowed to run.

- Click Start Import.
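The Since Timestamp value uses the yyyy-mm-dd HH:MM:SS UTC layout. The sketch below shows one way to produce that string from a timezone-aware Python datetime and how the strict "after this date" comparison behaves at the boundary; the helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Python spelling of the documented yyyy-mm-dd HH:MM:SS UTC layout.
SINCE_FORMAT = "%Y-%m-%d %H:%M:%S UTC"


def since_value(moment: datetime) -> str:
    """Render a timezone-aware datetime as a Since Timestamp string in UTC."""
    return moment.astimezone(timezone.utc).strftime(SINCE_FORMAT)


def parse_since(value: str) -> datetime:
    """Parse a Since Timestamp string back into an aware UTC datetime."""
    return datetime.strptime(value, SINCE_FORMAT).replace(tzinfo=timezone.utc)


def is_imported(row_timestamp: datetime, since: str) -> bool:
    """Rows are imported only if their Timestamp Column is strictly after Since."""
    return row_timestamp > parse_since(since)


since = since_value(datetime(2024, 3, 1, 9, 30, tzinfo=timezone(timedelta(hours=2))))
print(since)  # 2024-03-01 07:30:00 UTC
```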

Export Audiences (Blob Storage) JSON

Export your Lytics audiences to Azure Blob Storage as newline-delimited JSON files, which can then be imported into other systems for improved segmentation and targeting.

Integration Details

- Implementation Type: Server-side Integration.

- Type: REST API Integration.

- Frequency: One-time or continuously updated Batch Integration.

- Resulting Data: User Profile data written in JSON format.

This integration uses the Azure Blob Service REST API to upload data to Azure Blob Storage. Each run of the job will proceed as follows:

- Create a Blob in the Container selected during configuration.

- Scan through the users in the selected audience, filter user fields based on the export configuration, and write them to the created Blob.

- If the job is configured to be continuously updated, any users that enter the audience will be exported. Select the One-time Export checkbox if you want to run it only once.
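Newline-delimited JSON simply means one JSON object per line. A minimal sketch of the scan, filter, and write steps, assuming each user is a plain dict of fields; the compact separators and sorted keys are choices of this sketch, not a description of Lytics' exact output.

```python
import json


def to_ndjson(users, fields=None):
    """Serialize users as newline-delimited JSON: one object per line.

    fields: the User Fields to keep; None keeps everything.
    """
    lines = []
    for user in users:
        record = {k: v for k, v in user.items() if fields is None or k in fields}
        lines.append(json.dumps(record, separators=(",", ":"), sort_keys=True))
    return "".join(line + "\n" for line in lines)


audience = [
    {"email": "a@example.com", "score": 3, "city": "Portland"},
    {"email": "b@example.com", "score": 7},
]
print(to_ndjson(audience, fields={"email", "score"}), end="")
```

Because each line is a complete JSON document, a downstream system can process the file line by line without loading it all at once.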

Fields

The fields exported to the Blob will depend on the User Fields option in the job configuration described below. Any user field in your Lytics account may be available for export.

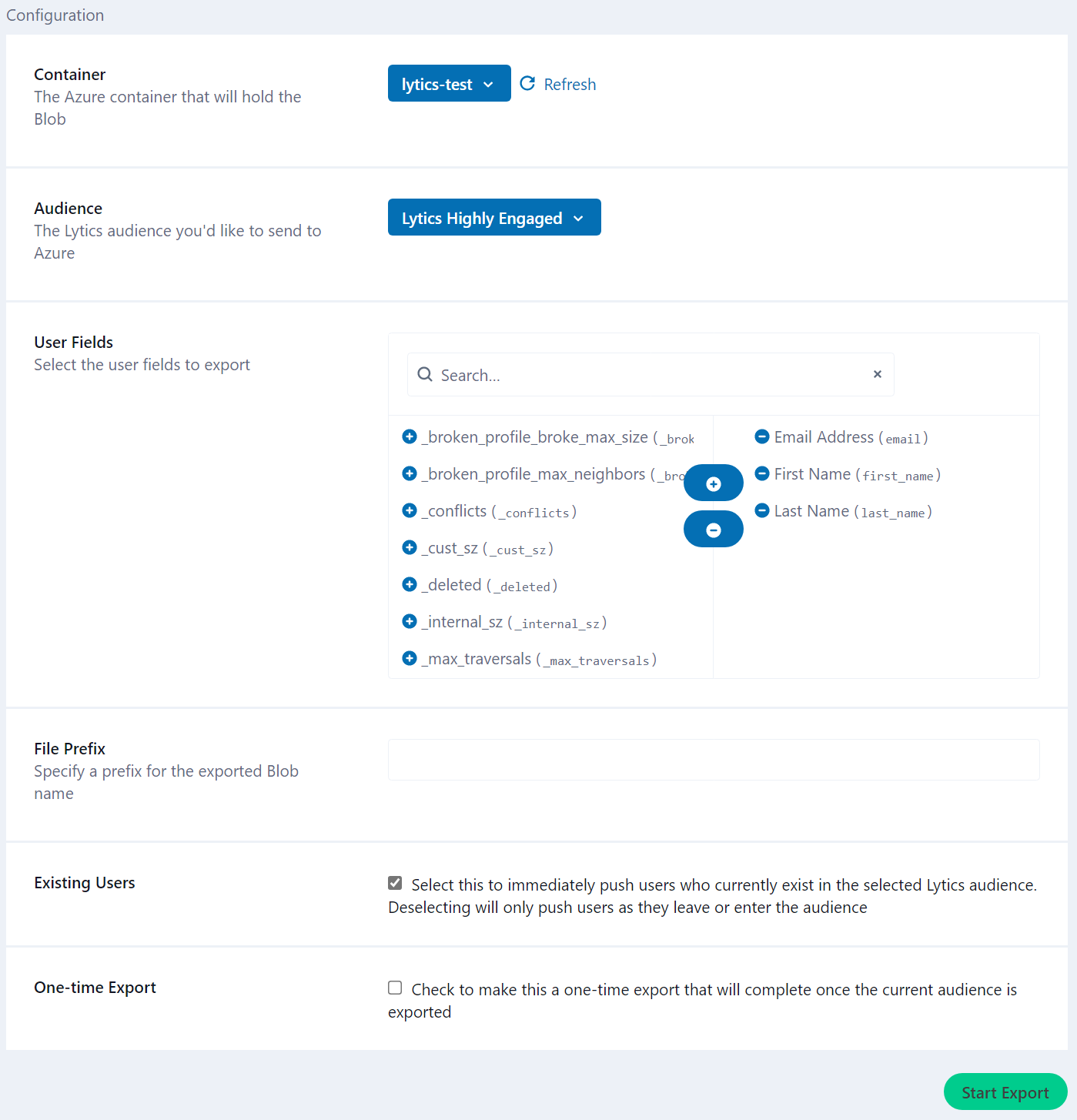

Configuration

Follow these steps to set up and configure an export of user profiles to Azure Blob Storage in the Lytics platform. If you are new to creating jobs in Lytics, see the Destinations documentation for more information.

- Select Microsoft Azure from the list of providers.

- Select the Export Audiences (Blob Storage) JSON job type from the list.

- Select the Authorization you would like to use or create a new one.

- Enter a Label to identify this job you are creating in Lytics.

- (Optional) Enter a Description for further context on your job.

- Select the audience to export.

- Complete the configuration steps for your job.

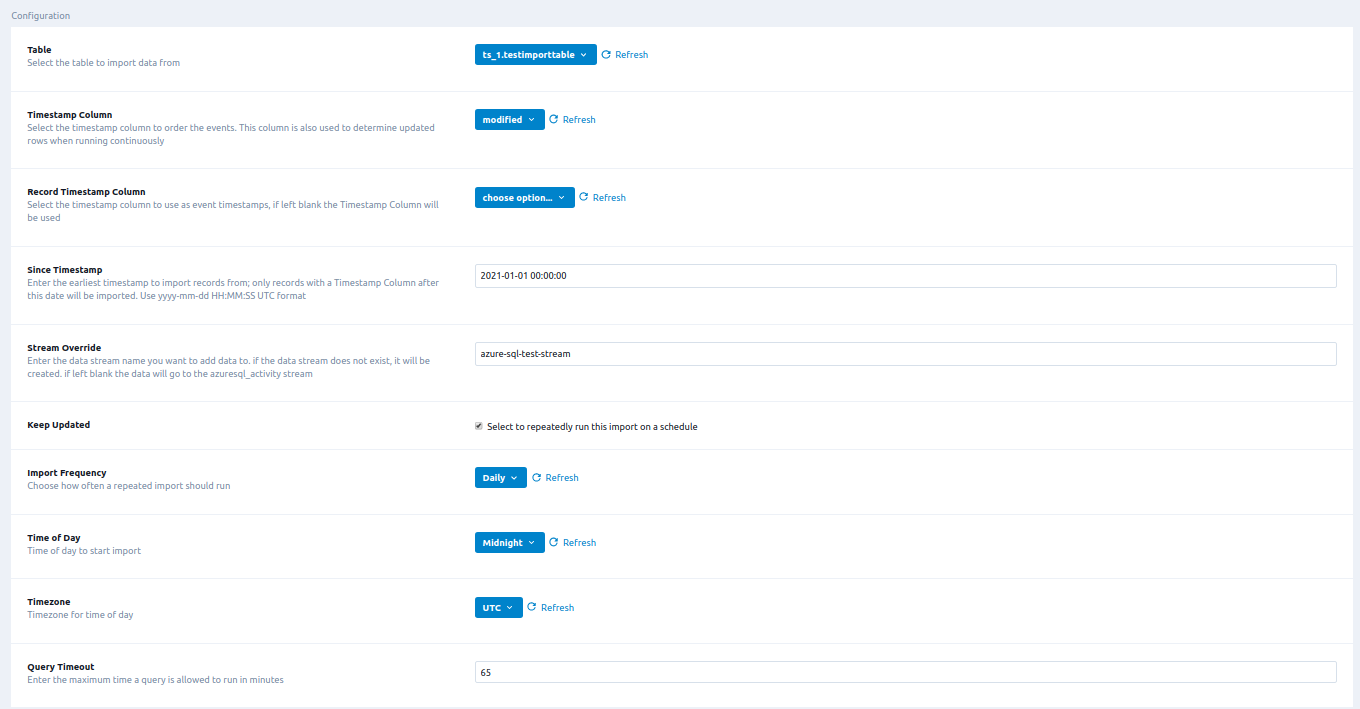

- From the Container input, select the Azure container that will hold the Blob.

- (Optional) From the User Fields input, select the user fields to export.

- (Optional) In the File Prefix text box, enter a prefix for the exported Blob name.

- (Optional) Select the Existing Users checkbox to immediately push users who currently exist in the selected Lytics audience. If it is deselected, users are pushed only as they enter or leave the audience.

- (Optional) Select the One-time Export checkbox to make this a one-time export that completes once the current audience is exported.

- (Optional) In the Maximum File Size box, enter the maximum size of each exported file in bytes. If left blank, the default is 5368709120 (5 GiB).

- Click the Start job button.
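The Maximum File Size option caps how many bytes go into each exported file; the default 5368709120 is exactly 5 × 1024³ bytes. A hedged sketch of size-based splitting that never breaks a record across files (split_by_size is a hypothetical helper, and keeping oversized single records whole is an assumption):

```python
DEFAULT_MAX_BYTES = 5_368_709_120  # 5 GiB, the documented default


def split_by_size(lines, max_bytes=DEFAULT_MAX_BYTES):
    """Group newline-terminated lines into files no larger than max_bytes.

    A single line longer than max_bytes still gets its own file, because
    this sketch never splits a record across files.
    """
    files, current, size = [], [], 0
    for line in lines:
        n = len(line.encode("utf-8"))
        if current and size + n > max_bytes:
            files.append(current)  # close the current file, start a new one
            current, size = [], 0
        current.append(line)
        size += n
    if current:
        files.append(current)
    return files


print(split_by_size(["aaaa\n", "bbbb\n", "cc\n"], max_bytes=10))
# [['aaaa\n', 'bbbb\n'], ['cc\n']]
```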

Export Audiences (Blob Storage) CSV

Export your Lytics audiences to Azure Blob Storage as CSV files, which can then be imported into other systems for improved segmentation and targeting.

Integration Details

- Implementation Type: Server-side Integration

- Type: REST API Integration

- Frequency: One-time or continuously updated Batch Integration.

- Resulting Data: User Profile data written to a CSV file.

This integration uses the Azure Blob Service REST API to upload data to Azure Blob Storage. Each job run will proceed as follows:

- Create a Blob in the Container selected during configuration.

- Scan through the users in the selected audience, filter user fields based on the export configuration, and write them to the created Blob.

- If the job is configured to be continuously updated, any users that enter the audience will be exported. Select the One-time Export checkbox if you want to run it only once.

Fields

The fields exported to the Blob will depend on the User Fields option in the job configuration described below. Any user field in your Lytics account may be available for export.

Configuration

Follow these steps to set up and configure an export job for Microsoft Azure in the Lytics platform. If you are new to creating jobs in Lytics, see the Destinations documentation for more information.

- Select Microsoft Azure from the list of providers.

- Select the Export Audiences (Blob Storage) CSV job type from the list.

- Select the Authorization you would like to use or create a new one.

- Enter a Label to identify this job you are creating in Lytics.

- (Optional) Enter a Description for further context on your job.

- Select the audience to export.

- Complete the configuration steps for your job.

- From the Container input, select the Azure container that will hold the Blob.

- (Optional) From the User Fields input, select the user fields to export.

- (Optional) In the File Prefix text box, enter a prefix for the exported Blob name.

- (Optional) In the Delimiter text box, enter the delimiter for CSV files.

- (Optional) In the Join text box, enter the delimiter to use for array fields in CSV files. Map fields will be URL-encoded.

- (Optional) Select the Existing Users checkbox to immediately push users who currently exist in the selected Lytics audience. If it is deselected, users are pushed only as they enter or leave the audience.

- (Optional) Select the One-time Export checkbox to make this a one-time export that completes once the current audience is exported.

- Click the Start job button.
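The Delimiter and Join options interact as follows in this sketch: array values are joined with the Join string, map values are URL-encoded with urllib.parse.urlencode, and the whole row is written with the chosen delimiter. The helper names and the "|" default for Join are assumptions for illustration; Lytics' actual defaults are not stated here.

```python
import csv
import io
from urllib.parse import urlencode


def csv_cell(value, join):
    """Flatten one user field into a CSV cell."""
    if isinstance(value, list):
        return join.join(str(v) for v in value)  # array fields use Join
    if isinstance(value, dict):
        return urlencode(value)  # map fields are URL-encoded
    return "" if value is None else str(value)


def users_to_csv(users, fields, delimiter=",", join="|"):
    """Write a header row plus one row per user, using the chosen delimiter."""
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter=delimiter, lineterminator="\n")
    writer.writerow(fields)
    for user in users:
        writer.writerow([csv_cell(user.get(name), join) for name in fields])
    return buf.getvalue()


users = [{"email": "a@example.com", "segments": ["vip", "new"],
          "attrs": {"plan": "pro", "seats": 5}}]
print(users_to_csv(users, ["email", "segments", "attrs"]), end="")
# email,segments,attrs
# a@example.com,vip|new,plan=pro&seats=5
```

Choosing a Join string that differs from the Delimiter keeps array cells unquoted; if they match, the CSV writer has to quote those cells.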

Export Audiences (Event Hub)

The export job to Azure Event Hub allows you to send audience triggers when users enter or exit your Lytics audiences.

Integration Details

- Implementation Type: Server-side Integration.

- Type: REST API Integration.

- Frequency: Continuously updated Batch Integration.

- Resulting Data: User enter and exit events for Lytics audiences, sent to Event Hub.

This integration uses the Azure Event Hubs REST API to send Lytics audience enter and exit events to Event Hub. Each run of the job proceeds as follows:

- Scan through the users in the selected audience and send them to Event Hub.

- Keep the job active to export any users who enter or exit the audience.

Fields

Lytics sends enter and exit event information about the user to Event Hub. An event may be triggered by any of the following:

- A new data event.

- An occasional update to the user's profile scores.

- A scheduled trigger evaluation.

For example, a trigger of "has done X of Y in the last 7 days" may be scheduled for evaluation 7 days after the last X event. When the user is updated, audience membership is re-evaluated, and a trigger is sent if the user has moved into or out of the audience. Below is an example of the message sent to Event Hub:

```json
{
  "data": {
    "_created": "2016-06-29T18:50:16.902758229Z",
    "_modified": "2017-03-18T06:12:36.829070108Z",
    "email": "user@example.com",
    "user_id": "user123",
    "segment_events": [
      {
        "id": "d3d8f15855b6b067709577342fe72db9",
        "event": "exit",
        "enter": "2017-03-02T06:12:36.829070108Z",
        "exit": "2017-03-18T06:12:36.829070108Z",
        "slug": "demo_segment"
      },
      {
        "id": "abc678asdf",
        "event": "enter",
        "enter": "2017-03-02T06:12:36.829070108Z",
        "exit": "2099-03-18T06:12:36.829070108Z",
        "slug": "another_segment"
      }
    ]
  },
  "meta": {
    "object": "user",
    "subscription_id": "7e2b8804bbe162cd3f9c0c5991bf3078",
    "timestamp": "2017-03-18T06:12:36.829070108Z"
  }
}
```

Configuration

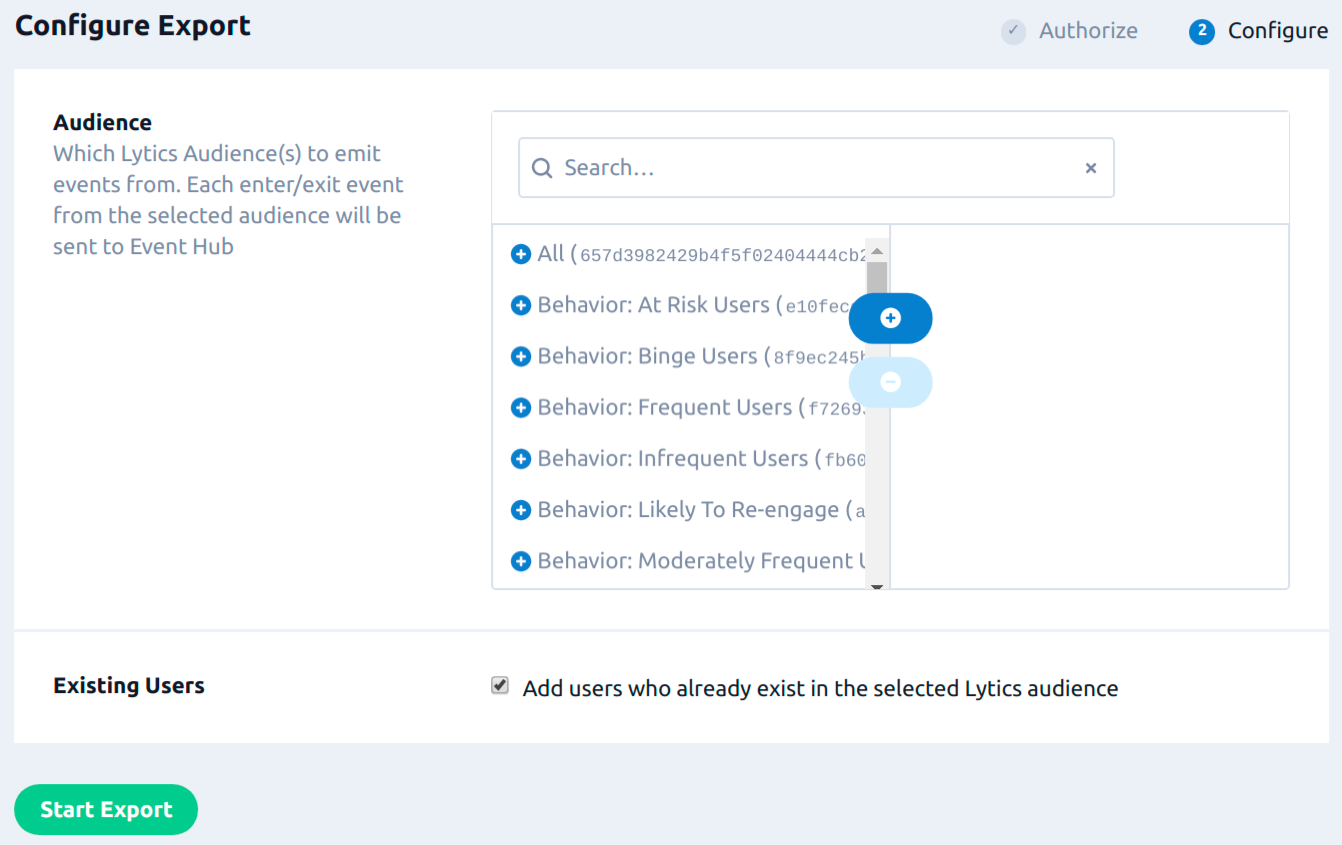

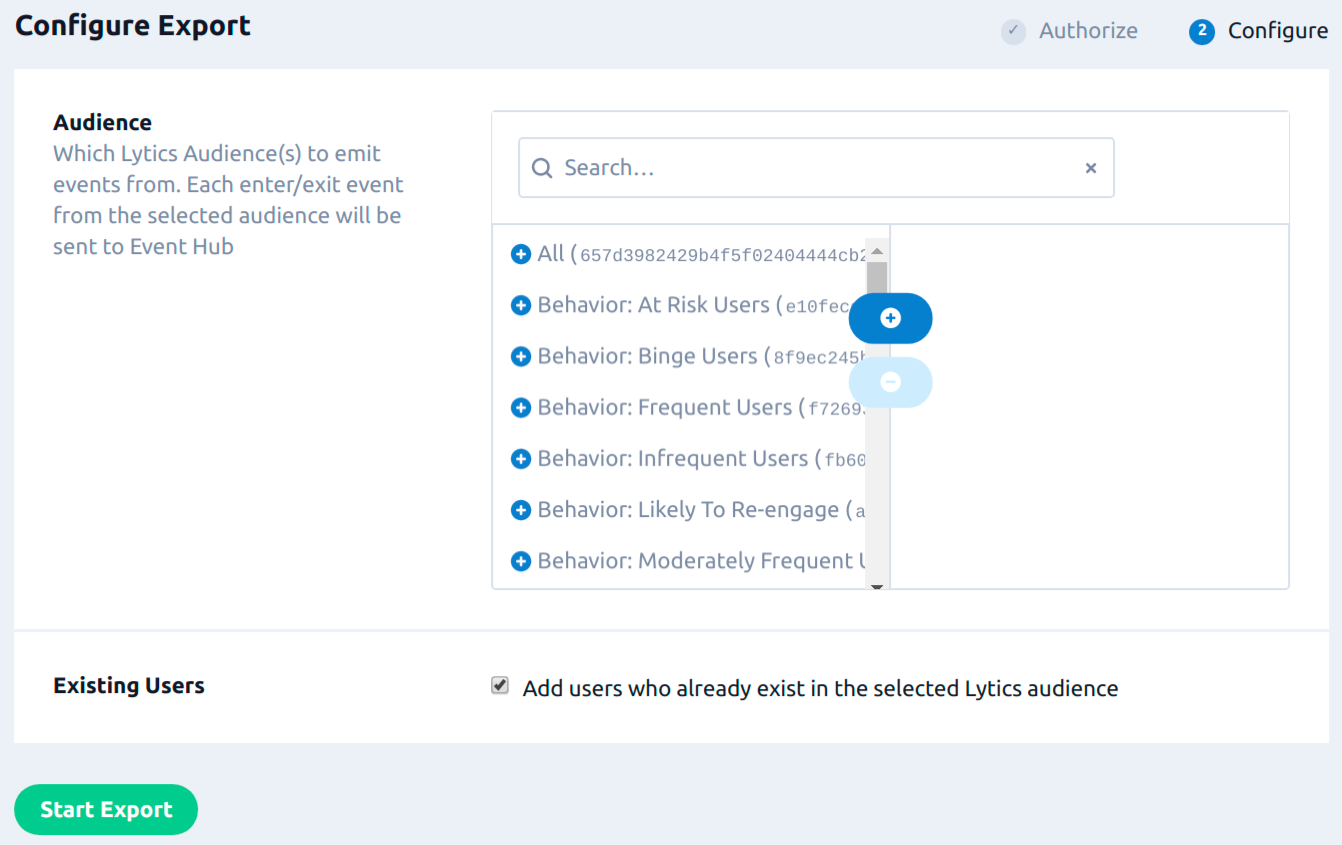

Follow these steps to set up and configure an export of user events to Azure Event Hub in the Lytics platform. If you are new to creating jobs in Lytics, see the Destinations documentation for more information.

- Select Microsoft Azure from the list of providers.

- Select the Export Audiences (Event Hub) job type from the list.

- Select the Authorization you would like to use or create a new one.

- Enter a Label to identify this job you are creating in Lytics.

- (Optional) Enter a Description for further context on your job.

- From the Audience list, select the Lytics audiences you want to export. Enter and exit events for the audiences shown in the list on the right side will be exported.

- (Optional) Select the Existing Users checkbox to enable a backfill of the current members of the audience(s) to Event Hub immediately.

- Click Start Export.
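On the receiving side, each message carries the user's identifiers plus a segment_events list, as in the example message earlier in this section. The sketch below extracts (user_id, slug, event) tuples from one message body using only those documented field names; reading messages from Event Hub itself (for example with an Event Hubs SDK) is out of scope, and segment_changes is a hypothetical helper.

```python
import json


def segment_changes(message: str):
    """Return (user_id, slug, event) tuples from one audience-trigger message.

    Only field names from the documented example are used; anything else
    is treated as unknown.
    """
    body = json.loads(message)
    if body.get("meta", {}).get("object") != "user":
        return []
    data = body.get("data", {})
    return [
        (data.get("user_id"), seg.get("slug"), seg.get("event"))
        for seg in data.get("segment_events", [])
    ]


sample = json.dumps({
    "data": {
        "user_id": "user123",
        "segment_events": [
            {"slug": "demo_segment", "event": "exit"},
            {"slug": "another_segment", "event": "enter"},
        ],
    },
    "meta": {"object": "user"},
})
for user_id, slug, event in segment_changes(sample):
    print(user_id, slug, event)
```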

Export Events (Blob Storage)

Export events into Azure Blob Storage so you can access, archive, or run analysis on Lytics events in the Azure ecosystem.

Integration Details

- Implementation Type: Server-side Integration

- Implementation Technique: REST API Integration

- Frequency: One-time or scheduled Batch Integration which may be hourly, daily, weekly, or monthly depending on configuration.

- Resulting data: Raw Event Data exported to a CSV file format.

This integration uses the Azure Blob Service REST API to write CSVs to the configured container in Azure Blob Storage. Each run of the job will proceed as follows:

- Create a blob with the configured file name.

- Read events from the configured Lytics data streams. If the export is configured to run periodically, only the events since the last run of the export will be included.

- PGP-encrypt the CSV if a PGP key is provided in the Azure authorization.

- Compress the CSV, if compression is selected in the export's configuration.

- Write the event data to Azure Blob Storage by uploading it in blocks with PUT requests.
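Azure block blobs are written by uploading blocks (Put Block) and then committing them (Put Block List). Block IDs must be Base64-encoded, and every ID in a blob must have the same length before encoding, which is why the sketch zero-pads a counter. It covers only the optional gzip step and block preparation; PGP encryption and the HTTP calls are omitted, and prepare_blocks and its block size are hypothetical.

```python
import base64
import gzip


def prepare_blocks(csv_bytes: bytes, block_size: int, compress: bool = False):
    """Split the (optionally gzip-compressed) CSV into (block_id, chunk) pairs.

    Block IDs are Base64 strings of a zero-padded counter, so every ID has
    the same pre-encoding length, as Azure requires within one blob.
    """
    payload = gzip.compress(csv_bytes) if compress else csv_bytes
    blocks = []
    for index, start in enumerate(range(0, len(payload), block_size)):
        block_id = base64.b64encode(f"{index:08d}".encode()).decode()
        blocks.append((block_id, payload[start:start + block_size]))
    return blocks


blocks = prepare_blocks(b"a,b\n1,2\n", block_size=3)
for block_id, chunk in blocks:
    print(block_id, chunk)
```

After uploading each chunk under its ID, the blob would be committed by listing the IDs in order.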

Fields

The fields included depend on the raw events in the selected Lytics data streams. All fields in the selected streams will be included in the exported CSV.

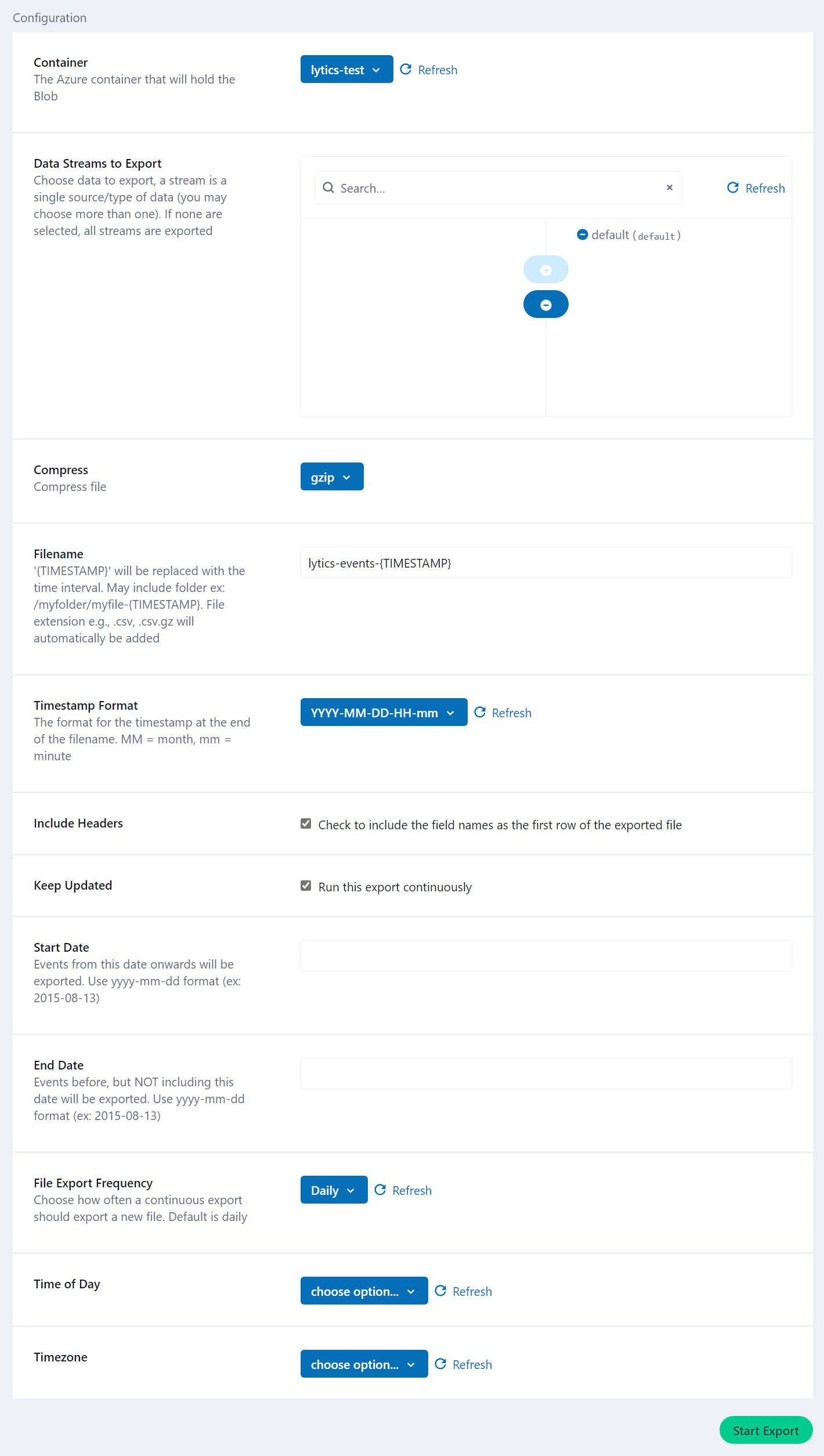

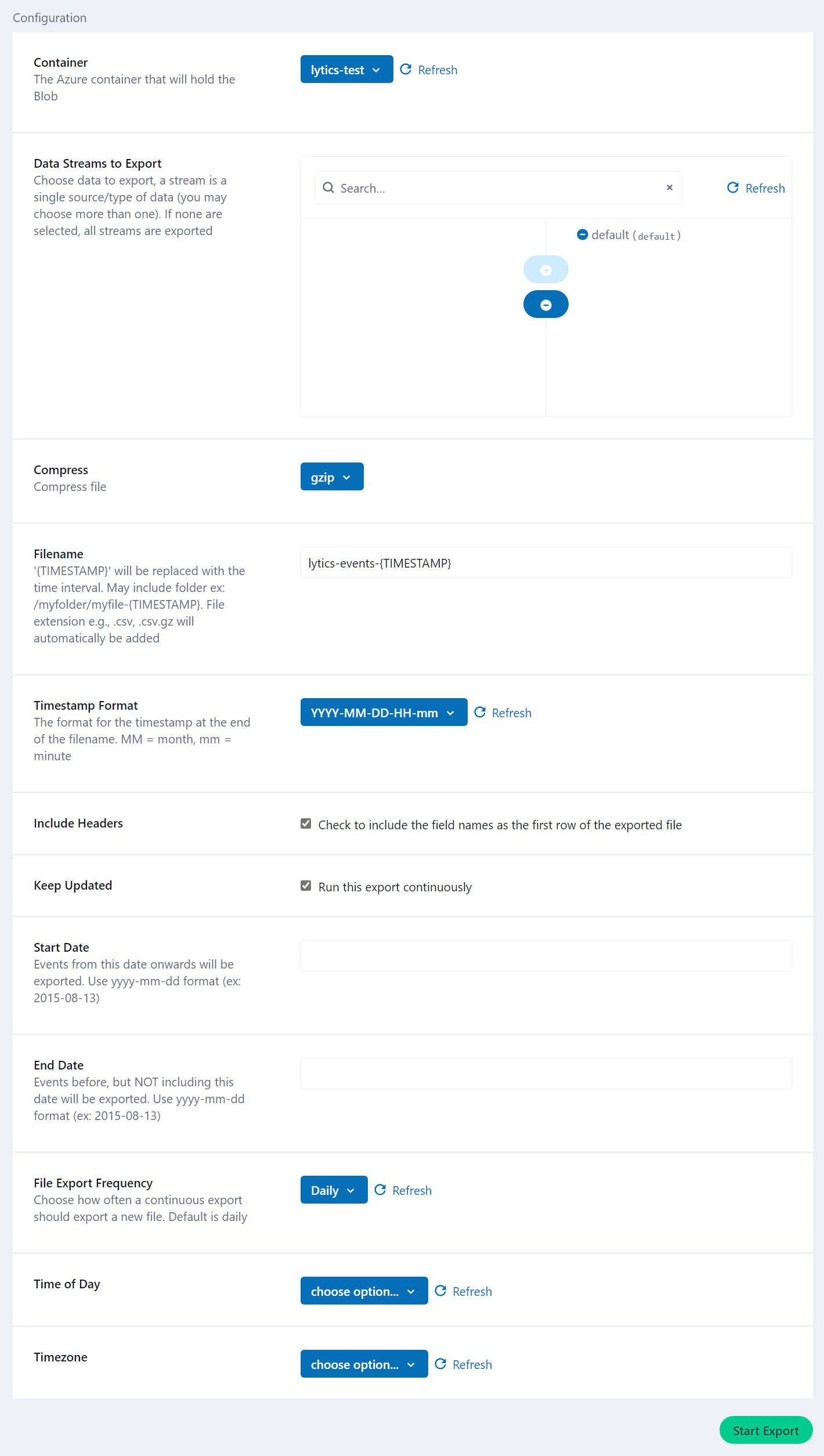

Configuration

Follow these steps to set up and configure an export job for Microsoft Azure in the Lytics platform. If you are new to creating jobs in Lytics, see the Destinations documentation for more information.

- Select Microsoft Azure from the list of providers.

- Select the Export Events (Blob Storage) job type from the list.

- Select the Authorization you would like to use or create a new one.

- Enter a Label to identify the job you are creating in Lytics.

- (Optional) Enter a Description for further context on your job.

- Complete the configuration steps for your job.

- From the Container input, select the Azure container that will hold the Blob.

- From the Data Streams to Export input, select the event data streams to export. A stream is a single source or type of data, and you may choose more than one. If none are selected, all streams are exported.

- (Optional) From the Compress input, select the compression type for the file (gzip, zip, or none).

- In the Filename input, enter the naming convention for the files. {TIMESTAMP} will be replaced with the current time of the export. The filename may include a folder, e.g., /myfolder/myfile-{TIMESTAMP}. The file extension (e.g., .csv or .csv.gz) will be added automatically.

- (Optional) From the Timestamp Format input, select the format for {TIMESTAMP} in the filename. MM = month, mm = minute.

- (Optional) Select the Include Headers checkbox to include the field names as the first row of the exported file.

- (Optional) Select the Keep Updated checkbox to run this export continuously.

- (Optional) In the Start Date text box, enter the date from which events will be exported (inclusive). Use yyyy-mm-dd format (e.g., 2015-08-13).

- (Optional) In the End Date text box, enter the date before which events will be exported; events on this date are NOT included. Use yyyy-mm-dd format (e.g., 2015-08-13).

- (Optional) From the File Export Frequency input, select how often a continuous export should export a new file. The default is daily.

- (Optional) From the Time of Day input, select the time of day to run a daily or weekly export.

- (Optional) From the Timezone input, select the timezone for the time of day specified above.

- Click Start Export.
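The Filename, Timestamp Format, Start Date, and End Date options can be sketched together. Assumptions: the Timestamp Format uses the tokens yyyy, MM, dd, HH, mm, and ss (only MM and mm are confirmed above), gzip adds .csv.gz while no compression adds .csv, and Start Date is inclusive while End Date is exclusive. The function names are hypothetical.

```python
import re
from datetime import date, datetime
from typing import Optional

# Assumed Timestamp Format tokens; only MM (month) and mm (minute) are documented.
_TOKENS = {"yyyy": "%Y", "MM": "%m", "dd": "%d", "HH": "%H", "mm": "%M", "ss": "%S"}
_TOKEN_RE = re.compile("|".join(_TOKENS))


def render_filename(template: str, ts_format: str, when: datetime,
                    gzipped: bool = False) -> str:
    """Replace {TIMESTAMP} and append the extension the export would add."""
    # One regex pass, so a replacement like "%m" is never re-scanned as "mm".
    strftime_format = _TOKEN_RE.sub(lambda m: _TOKENS[m.group(0)], ts_format)
    name = template.replace("{TIMESTAMP}", when.strftime(strftime_format))
    return name + (".csv.gz" if gzipped else ".csv")


def in_export_window(day: date, start: Optional[date], end: Optional[date]) -> bool:
    """Start Date is inclusive; End Date is exclusive."""
    return (start is None or day >= start) and (end is None or day < end)


when = datetime(2015, 8, 13, 14, 5, 9)
print(render_filename("/myfolder/myfile-{TIMESTAMP}", "yyyyMMdd-HHmmss", when))
# /myfolder/myfile-20150813-140509.csv
```

Translating tokens in a single regex pass matters here: replacing MM and then mm with plain string replacement could corrupt the already-translated "%m".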
