Databricks

Overview

Databricks is a software platform that provides you a way to organize your data for the purpose of analytics and data science.

Integrating Lytics with Databricks allows you to import data from your Databricks database into Lytics and then activate to various advertising and marketing platforms.

Authorization

If you haven't already done so, you will need to set up a Databricks account before you begin the process described below.

Please see connection with databricks using go sql driver for details on how Lytics integrates with Databricks. You would need Host, HTTP Path and Personal Access Token in order to connect to Databricks. Please refer to Databrick's connection details for cluster or connection details for sql warehouse documentation to get host and HTTP path information. For personal access token, please refer to Databrick's generating personal access token documentation.

Please make sure your Databricks cluster is running before you begin the authorization process. It can take up to 5 minutes for the cluster to initiate.

Your Databricks cluster must be running to configure an authorization & connection and create Cloud Connect audiences in Lytics.

We recommend using SQL Warehouses (ideally Serverless SQL Warehouses).

If you are new to creating authorizations in Lytics, see the Authorizations documentation for more information.

- Select Databricks from the list of providers.

- Select the Databricks Database Authorization method for authorization.

- Enter a Label to identify your authorization.

- (Optional) Enter a Description for further context on your authorization.

- In the Host text box, enter Databricks server hostname.

- In the Port numeric text box, enter Databricks server port. Default port for Databricks cluster is 443.

- In the HTTP Path text box, enter Databricks compute resources URL.

- In the Token text box, enter your Databricks personal access token.

- Click Complete to save the authorization.

Import Table

Import user data directly from your Databricks database table into Lytics, resulting in new user profiles or updates to fields on existing profiles.

Integration Details

- Implementation Type: Server-side Integration.

- Implementation Technique: Databricks database connection.

- Frequency: One-time or scheduled Batch Integration can be hourly, daily, weekly, or monthly depending on configuration).

- Resulting data: Raw Events, User Profiles and User Fields.

This integration uses Databricks gosql driver to establish connection with Databricks database and imports data by querying the table selected during configuration. Once started, the job will:

- Creates a temporary view containing a snapshot of the database table to query against.

- Adds a column for consistent ordering of data.

- Only rows that have a timestamp after the last import, or Since Date will be included.

- Query a batch of rows from the temporary table.

- Emits rows to the data stream.

- Repeat steps 2 and 3 until the entire temporary view is read.

- Once all the rows are imported, if the job is configured to run continuously, it will sleep until the next run. The time between runs can be selected during configuration.

Fields

Fields imported through Databricks will require custom data mapping.

Configuration

Follow these steps to set up and configure a Databricks import table job in the Lytics platform. If you are new to creating jobs in Lytics, see the Jobs Dashboard documentation for more information.

-

Select Databricks from the list of providers.

-

Select the Import Table job type from the list.

-

Select the Authorization you would like to use or create a new one.

-

Enter a Label to identify this job you are creating in Lytics.

-

(Optional) Enter a Description for further context on your job.

-

Complete the configuration steps for your job.

Your Databricks cluster must be running to configure an authorization and a job in Lytics. It can take up to 5 minutes for the cluster to initiate.

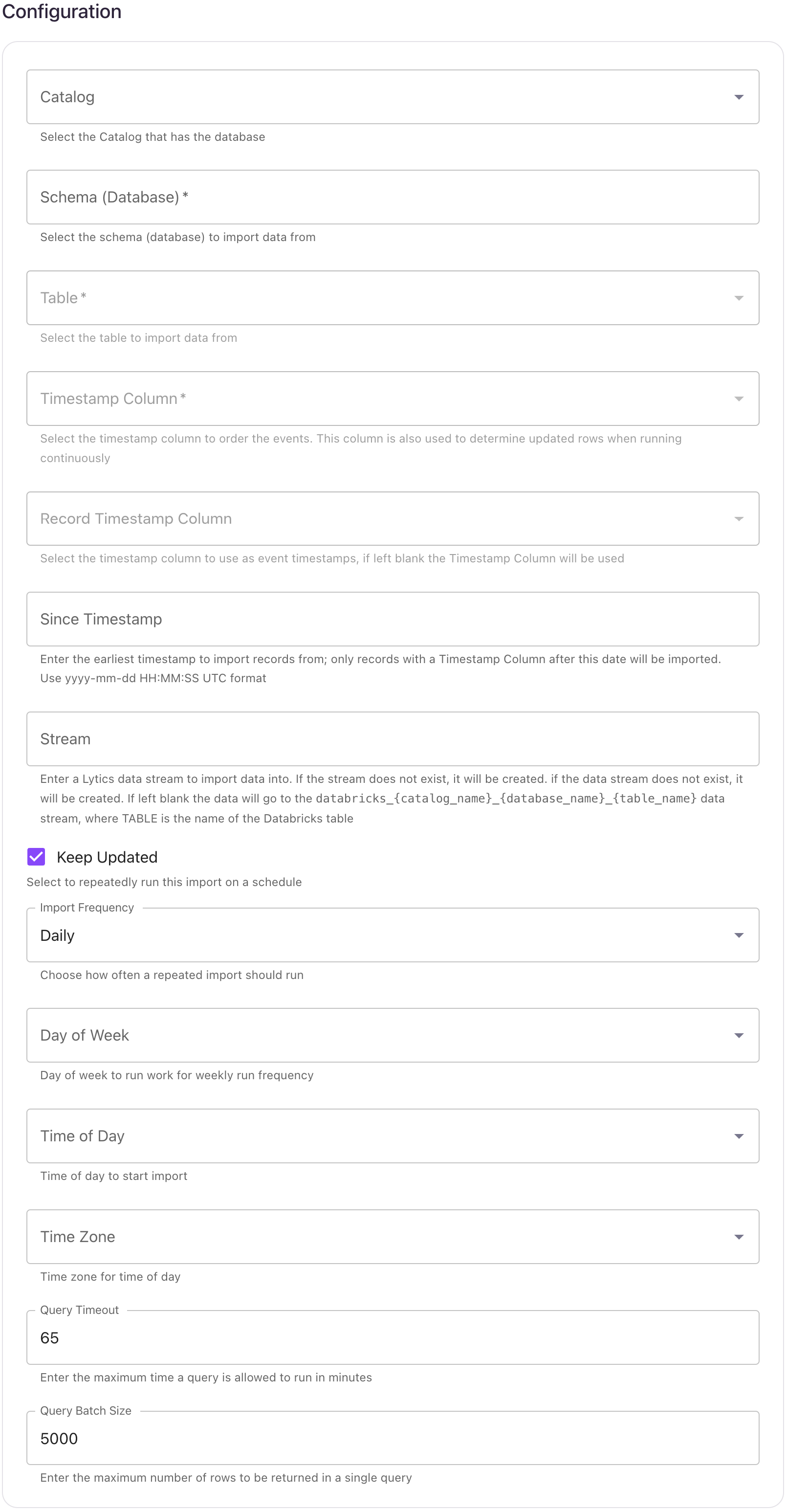

- Using the Catalog dropdown menu, select the Databricks catalog that has the database.

- Using the Database dropdown menu, select the database you would like to import data from.

- Using the Table dropdown menu, select the table you want to import data from.

- From the Timestamp Column input, select the timestamp column to order the events. This column is also used to determine updated rows when running continuously.

- (Optional) From the Record Timestamp Column input, select the timestamp column to use as event timestamps, if left blank the Timestamp Column will be used.

- (Optional) In the Since Timestamp text box, enter the earliest timestamp to import records from; only records with a Timestamp Column after this date will be imported. Use

yyyy-mm-ddHH:MM:SSUTC format. - (Optional) From the Stream input, select or type the data stream name you want to add data to. If the data stream does not exist, it will be created. If left blank the data will go to the

databricks_{catalog_name}_{database_name}_{table_name}stream. - (Optional) Select the Keep Updated checkbox to repeatedly run this import on a schedule.

- (Optional) From the Import Frequency input, choose how often a repeated import should run.

- (Optional) From the Time of Day input, select time of day to start import.

- (Optional) From the Timezone input, select timezone for time of day.

- (Optional) In the Query Timeout numeric field, enter the maximum time a query is allowed to run in minutes.

- (Optional) In the Query Batch Size numeric field, enter the maximum number of rows to be returned in a single query.

- Click Start Import.

Export Audience

Export user profile data from Lytics to a Databricks table and leverage Databricks tools to organize, transform and analyze the data as well as to generate reports to assist in improving campaign performance.

Integration Details

- Implementation Type: Server-side Integration.

- Implementation Technique: File Based Transfer Integration and Databricks database connection.

- Frequency: Batch Integration every 24 hours. Each batch contains the entire audience, and replaces the previous table.

- Resulting data: User Fields will be exported to Databricks as rows in a table.

This integration connects to your Databricks using gosql driver. It uses databricks Copy INTO command to load data from Lytics. Once started, the job will:

- Create a placeholder table in Databricks with no schema. The name of the table follows

lytics_user_{audience_slug}format. - Scan the audience for export, and writes the user data in CSV format to the Lytics-managed AWS S3 bucket.

- Load the data from Lytics AWS bucket to the Databricks table created above via COPY INTO command.

- If the export is configured to run continuously, the workflow will go to sleep for configured period of time before repeating steps 1 through 3.

Everytime the job runs, it will drop and create the placeholder table. The schema of the table is later inferred by Databricks during COPY INTO command.

Fields

If fields are selected during job configuration, only those fields will be included in the resulting Databricks table. If none is selected then all the user fields will be exported. Non-scalar fields will be exported as JSON string.

Configuration

Follow these steps to set up and configure a Databricks export job in the Lytics platform. If you are new to creating jobs in Lytics, see the Jobs Dashboard documentation for more information.

-

Select Databricks from the list of providers.

-

Select the Export Audience job type from the list.

-

Select the Authorization you would like to use or create a new one.

-

Enter a Label to identify this job you are creating in Lytics.

-

(Optional) Enter a Description for further context on your job.

-

Complete the configuration steps for your job.

-

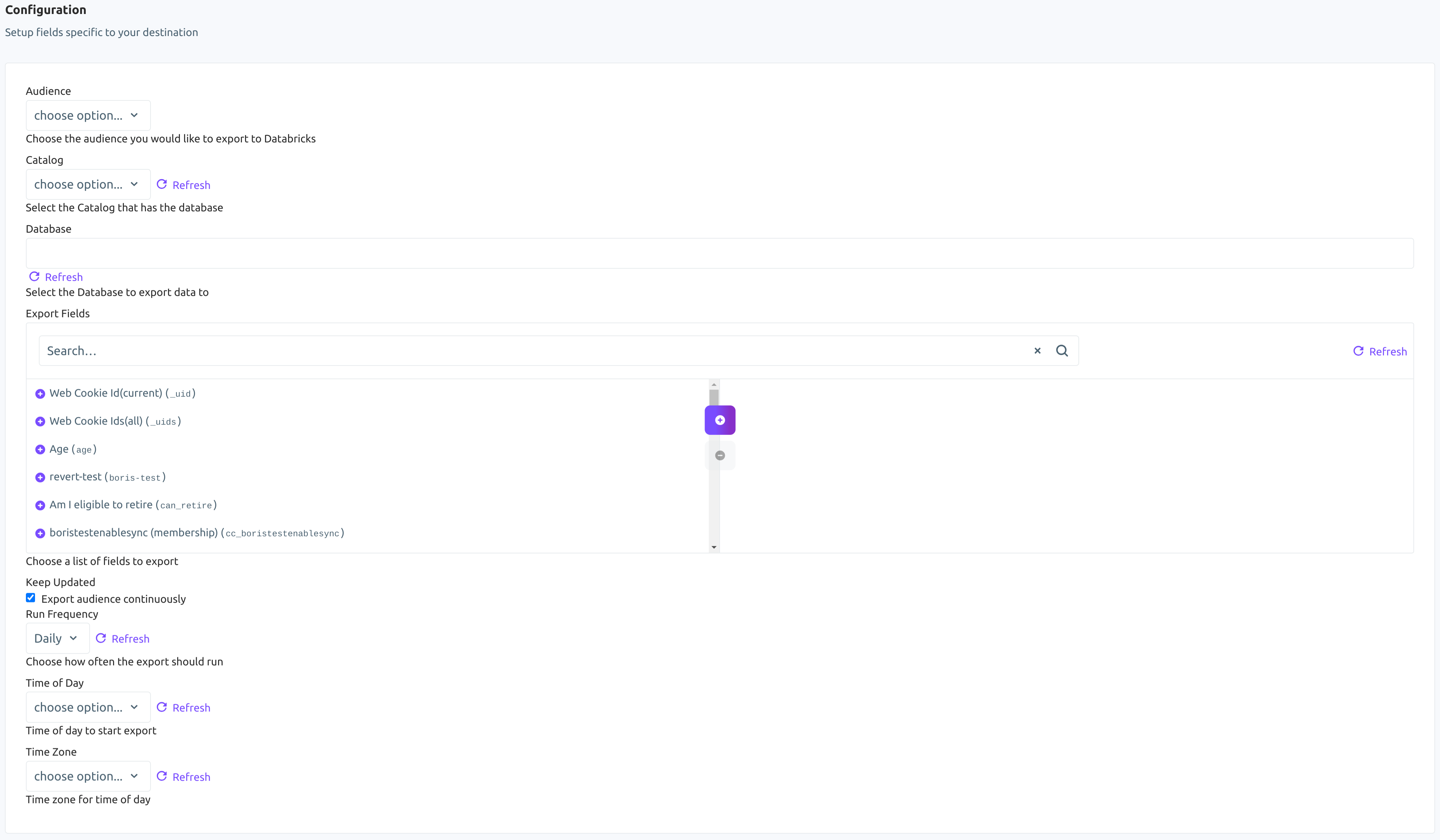

Using the Audience dropdown menu, select the Lytics audience you would like to export.

-

Using the Catalog dropdown menu, select the Databricks catalog that has the database.

-

Using the Database dropdown menu, select the Databricks database you would like to export data to.

-

From the Export Fields input, choose a list of Lytics user profile fields to export. Each field will be exported to the table as an individual column. If none are selected, then all the fields will be exported.

-

(Optional) Select the Keep Updated checkbox to export the audience continuously.

-

(Optional) From the Run Frequency imput, select how often to export the users.

-

(Optional) From the Time of Day input, select the time of day to start continuous exports.

-

(Optional) From the Timezone input, select a timezone for time of day.

-

Click Start Export.

Updated 6 months ago