Snowflake

Overview

Snowflake is a cloud-based data platform that allows for easy and reliable access to your data. Integrating Lytics with Snowflake allows you to seamlessly import your Snowflake data into Lytics to leverage Lytics' segmenting and insights capabilities. Lytics audiences can also be exported in bulk to Snowflake for auditing, querying, and reporting.

Authorization

Snowflake authorization update: Snowflake will block single-factor password authentication in November 2025 (see https://www.snowflake.com/en/blog/blocking-single-factor-password-authentification/). To comply, Lytics now accepts key-pair credentials instead of a password.

To continue using the Snowflake workflows, you will need to create new authorizations and update your workflows to use them before November 2025.

If you haven't already done so, you will need to set up a Snowflake account before you begin the process described below. For imports, you will need to provide the credentials and role for a user who has access to the data you wish to import. For exports, you will need to provide the credentials and role for a user who has access to the database and schema you wish to export to.

You will need to create a user in Snowflake with Type=SERVICE for the Lytics connection and create a new private/public key pair as described here: https://docs.snowflake.com/en/user-guide/key-pair-auth.

Lytics only supports unencrypted private keys.

For this reason, once the private key is created, it should only be used in setting up the Lytics authorization, and should not be saved anywhere else.
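Because only unencrypted keys are accepted, it can help to check a key's PEM header before pasting it into Lytics. The following is a minimal sketch, assuming the standard PEM markers: an unencrypted PKCS#8 key starts with `-----BEGIN PRIVATE KEY-----`, an encrypted PKCS#8 key starts with `-----BEGIN ENCRYPTED PRIVATE KEY-----`, and a traditional encrypted RSA key carries a `Proc-Type` line. The helper name `is_unencrypted_pem` is hypothetical and is not part of Lytics or Snowflake.

```python
def is_unencrypted_pem(pem_text: str) -> bool:
    """Return True if the PEM text looks like an unencrypted private key."""
    lines = [line.strip() for line in pem_text.strip().splitlines()]
    if not lines:
        return False
    header = lines[0]
    # Encrypted PKCS#8 keys use a distinct header line.
    if header == "-----BEGIN ENCRYPTED PRIVATE KEY-----":
        return False
    # Traditional encrypted RSA keys keep the RSA header but add a Proc-Type line.
    if any(line.startswith("Proc-Type:") and "ENCRYPTED" in line for line in lines):
        return False
    return header in (
        "-----BEGIN PRIVATE KEY-----",
        "-----BEGIN RSA PRIVATE KEY-----",
    )
```

This only inspects the text markers; it does not verify that the key is valid or that it matches the public key registered on the Snowflake user.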

If you are new to creating authorizations in Lytics, see the Authorizations documentation for more information.

- Select Snowflake from the list of providers.

- Select the method for authorization. Note that different methods may support different job types. Snowflake supports the following authorization methods: Snowflake Direct Authorization and Snowflake Direct Authorization for Bulk Export.

- Enter a Label to identify your authorization.

- (Optional) Enter a Description for further context on your authorization.

- Complete the configuration steps needed for your authorization. These steps will vary by method.

- Click Save Authorization.

Snowflake Direct Authorization

- Enter the Snowflake Account that contains the data you want to import. See Snowflake's Account Identifier documentation for details on account name format.

- Enter the Snowflake Warehouse you will run the extraction queries against. For more information, see Snowflake's Warehouse documentation.

- Enter the Snowflake Database that contains the data you want to import.

- Enter the Snowflake Username of a Snowflake user who has access to the data you want to import.

- Enter your Snowflake Private Key for the Snowflake user.

- Enter the Snowflake Role you will run extraction queries with. Make sure this role has access to the data you want to import. For import, the role will need:

  - usage on the database

  - usage on the schema

  - operate and usage on the warehouse

  For more information, see Snowflake's Roles documentation.

- Enter your user's Snowflake Password.
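The role privileges listed above for imports could be granted with statements like the ones this sketch generates. It is only an illustration: the function name `import_role_grants` and the object names in the usage note are hypothetical, and read access to the specific tables or views you import is not covered here.

```python
def import_role_grants(role: str, database: str, schema: str, warehouse: str) -> list[str]:
    """Build GRANT statements for the import privileges listed in this section."""
    return [
        # usage on the database
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        # usage on the schema (qualified with its database)
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO ROLE {role};",
        # operate and usage on the warehouse
        f"GRANT OPERATE, USAGE ON WAREHOUSE {warehouse} TO ROLE {role};",
    ]
```

For example, `import_role_grants("LYTICS_ROLE", "ANALYTICS", "PUBLIC", "LYTICS_WH")` returns three statements that an administrator could review and run in Snowflake.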

Snowflake Direct Authorization for Bulk Export

- Enter your Snowflake Account locator, e.g. xy12345.us-east-aws. Be sure to format the locator according to your cloud platform and region, as described here: https://docs.snowflake.com/en/user-guide/admin-account-identifier.html#locator-formats-by-cloud-platform-and-region

- Enter the Snowflake Warehouse you will run the load queries against. For more information, see Snowflake's Warehouse documentation.

- Enter the Snowflake Database you wish to export to.

- Enter the Username of a Snowflake user who has access to the schema you wish to export to.

- Enter the Snowflake Role you will run load queries with. Make sure this role has write permissions on the database and schema you wish to export to. For export, the role will need:

  - usage on the database

  - usage and create table on the schema

  - operate and usage on the warehouse

  For more information, see Snowflake's Roles documentation.

- Enter your Snowflake Private Key for the Snowflake user.

- In the GCS Stage Service Account text box, enter your GCS Stage Service Account. To set up a GCS Stage Service Account, see Required Snowflake Setup below. This is a one-time setup process.

- Optionally, enter the Storage Integration Name to use when connecting. If this is not set, GCS_INT_LYTICS will be used.

Import Data

Import data from a Snowflake table or view into Lytics, resulting in new user profiles or updates to fields on existing profiles.

Integration Details

- Implementation Type: Server-side Integration

- Implementation Technique: REST API Integration

- Frequency: Batch Integration

- Resulting Data: User Profiles, User Fields, and Raw Event Data

This integration ingests data from your Snowflake account by directly querying the table or view selected during configuration. Once started, the job will:

- Run a query to select and order the rows that have yet to be imported. If the import is continuous, the job will save the timestamp of the last row seen, so that only more recent data is imported during future imports.

- Once the query completes, the result set will be imported in batches, starting from the oldest row.

- Once the last row is imported, if the job is configured to run continuously, it will sleep until the next run. The time between runs can be selected during configuration.
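The steps above can be pictured with a small in-memory simulation. This is a sketch of the general watermark pattern only, not the actual Lytics job: the function names, the `updated_at` field, and the batch size are all assumptions made for illustration.

```python
from datetime import datetime


def select_new_rows(rows, ts_field, watermark):
    """Keep rows newer than the saved timestamp, ordered oldest first."""
    fresh = [r for r in rows if watermark is None or r[ts_field] > watermark]
    return sorted(fresh, key=lambda r: r[ts_field])


def batches(rows, size):
    """Yield consecutive slices of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def run_import(rows, ts_field, watermark, batch_size=2):
    """Simulate one run; return (list of imported batches, new watermark)."""
    new_rows = select_new_rows(rows, ts_field, watermark)
    imported = list(batches(new_rows, batch_size))
    if new_rows:
        # Remember the timestamp of the last (most recent) row seen.
        watermark = new_rows[-1][ts_field]
    return imported, watermark
```

Calling `run_import` a second time with the returned watermark imports nothing unless rows with a later timestamp have appeared, which mirrors how a continuous import only picks up new data.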

Fields

Fields imported through Snowflake will require custom data mapping. For assistance mapping your custom data to Lytics user fields, please contact Lytics Support.

Configuration

Follow these steps to set up and configure an import of Snowflake data in the Lytics platform. If you are new to creating jobs in Lytics, see the Data Sources documentation for more information.

- Select Snowflake from the list of providers.

- Select the Import Audiences and Activity Data job type from the list.

- Select the Authorization you would like to use or create a new one.

- Enter a Label to identify this job you are creating in Lytics.

- (Optional) Enter a Description for further context on your job.

- Using the Database dropdown menu, select the database you would like to import data from.

- Using the Source Type dropdown menu, select whether to import from a Snowflake table or view.

- Using the Source dropdown menu, select the table or view you want to import.

- In the Modified Timestamp Field text input, enter the name of the field containing the event timestamp. On continuous imports, the most recent time in this field will be saved. On the next import, only rows with a timestamp later than that value will be imported.

- Enter the name of the Lytics Stream where the data will be imported.

- (Optional) Select the Keep Updated checkbox to import the table or view continuously.

- (Optional) From the Frequency input, select the frequency at which to run the import.

- (Optional) Toggle Show Advanced Options.

- (Optional) In the Event Timestamp Field input, enter a timestamp field to use as the event timestamp in Lytics. If none is entered, Modified Timestamp Field will be used.

- (Optional) In the Events Since text box, enter the earliest date from which to import events. Only events that occurred after this date will be imported. The date must be RFC 3339 formatted (e.g. YYYY-MM-DDThh:mm:ss+00:00).

- Click Complete to start the import.
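For the Events Since value, a timezone-aware datetime can be rendered in the RFC 3339 shape shown above with Python's standard library. This is a small sketch; the helper name `to_rfc3339` and the example date are illustrative only.

```python
from datetime import datetime, timezone


def to_rfc3339(dt: datetime) -> str:
    """Format an aware datetime as YYYY-MM-DDThh:mm:ss+00:00 style text."""
    if dt.tzinfo is None:
        raise ValueError("datetime must be timezone-aware")
    # Drop sub-second precision so the output matches the documented pattern.
    return dt.replace(microsecond=0).isoformat()


events_since = to_rfc3339(datetime(2024, 1, 15, 8, 30, 0, tzinfo=timezone.utc))
print(events_since)  # 2024-01-15T08:30:00+00:00
```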

Bulk Audience Export

Export user profiles including user fields and audience memberships from Lytics to Snowflake.

Integration Details

- Implementation Type: Server-side Integration

- Implementation Technique: File Based Transfer Integration

- Frequency: Batch Integration every 24 hours. Each batch contains the entire audience, and replaces the previous table.

- Resulting data: User Fields will be exported to Snowflake as rows in a Snowflake table.

The resulting Snowflake schema is determined automatically by the workflow and will consist of the following Snowflake types according to the field's type in Lytics:

- BOOLEAN

- VARCHAR(16777216)

- NUMBER(38,0)

- FLOAT

- TIMESTAMP_TZ(9)

- VARIANT (used for non-scalar fields)
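The exact mapping from each Lytics field type to a Snowflake type is not spelled out here. As a rough illustration only, the sketch below assumes the natural correspondence and uses Python value types as stand-ins for Lytics field types; the function name `snowflake_type_for` is hypothetical.

```python
from datetime import datetime


def snowflake_type_for(value) -> str:
    """Guess a Snowflake column type from a sample Python value (assumed mapping)."""
    # bool must be checked before int because bool is a subclass of int.
    if isinstance(value, bool):
        return "BOOLEAN"
    if isinstance(value, int):
        return "NUMBER(38,0)"
    if isinstance(value, float):
        return "FLOAT"
    if isinstance(value, datetime):
        return "TIMESTAMP_TZ(9)"
    if isinstance(value, str):
        return "VARCHAR(16777216)"
    # Lists, maps, and other non-scalar values fall back to VARIANT.
    return "VARIANT"
```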

When a job is started, the workflow will:

1. Create a temporary table in Snowflake with the appropriate schema, named in the form USERS_{audience_slug}_{unix_timestamp}.

2. Scan the audience for export, and load in CSV format to the Lytics-managed GCS storage integration for your account (see setup instructions below).

3. Load the data from GCS to your Snowflake account via COPY INTO.

4. Once the load is complete, the temporary table will be renamed to a permanent table of the form USERS_{audience_slug}. NOTE: if a table of this name already exists in the target Snowflake schema with an identical schema to the temporary table, it will be dropped and replaced. If the schemas differ, the permanent table name will include an incremental suffix, e.g. USERS_{audience_slug}1.

5. If the export is configured to run continuously, the workflow will sleep for 24 hours before repeating steps 1 through 4.
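The table naming rules in the workflow above can be sketched as follows. This is an illustration, not the workflow's code: the function names are hypothetical, schemas are modeled as plain dictionaries of column name to type, and the behavior beyond the first suffix (trying 2, 3, and so on) is an assumption.

```python
import time


def temp_table_name(audience_slug, unix_timestamp=None):
    """Name of the temporary table: USERS_{audience_slug}_{unix_timestamp}."""
    ts = int(time.time()) if unix_timestamp is None else unix_timestamp
    return f"USERS_{audience_slug}_{ts}"


def final_table_name(audience_slug, temp_schema, existing_tables):
    """Pick the permanent table name.

    existing_tables maps table name -> schema for the target Snowflake schema.
    """
    base = f"USERS_{audience_slug}"
    # No table yet, or an identical schema: use the base name (an existing
    # table with an identical schema is dropped and replaced).
    if base not in existing_tables or existing_tables[base] == temp_schema:
        return base
    # Schemas differ: append an incremental suffix (assumed to keep counting up).
    suffix = 1
    while (
        f"{base}{suffix}" in existing_tables
        and existing_tables[f"{base}{suffix}"] != temp_schema
    ):
        suffix += 1
    return f"{base}{suffix}"
```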

Fields

If fields are selected during job configuration, only those fields will be included in the resulting Snowflake table. If no fields are selected, all fields on the profile will be exported.

Required Snowflake Setup

Exporting to Snowflake requires additional configuration in your Snowflake account. You will need to create a storage integration in your Snowflake account that references a Lytics-owned GCS bucket. You will then need to retrieve the GCS service account associated with your storage integration in order to authorize in Lytics. More information about creating GCS storage integrations can be found in Snowflake's documentation. Note that step 3 in those Snowflake docs is not necessary, as the GCS bucket is owned and managed by Lytics.

In your Snowflake account, run the following queries to set up a storage integration for Lytics. Note: you will need ACCOUNTADMIN permissions to run the following queries.

First, create your storage integration:

    create storage integration GCS_INT_LYTICS
      type = external_stage
      storage_provider = gcs
      enabled = true
      storage_allowed_locations = ('gcs://aid-{your-aid}-snowflake-exports-lyticsio/')

Note that you will need to replace {your-aid} with the four-digit AID for your Lytics account in the query above. If you don't know your AID, contact your account manager for assistance. You can also find the AID in the URL bar when signed into the account you are setting up. For example, in http://app.lytics.com/dashboard?aid=xxxx "xxxx" would be the AID for the account.
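If you prefer to generate the statement rather than edit the placeholder by hand, a small helper can substitute the AID and reject values that are not four digits. This is a sketch only; the function name `storage_integration_sql` is hypothetical, and the statement text simply mirrors the query shown above.

```python
import re


def storage_integration_sql(aid: str, name: str = "GCS_INT_LYTICS") -> str:
    """Fill the AID into the create storage integration statement."""
    if not re.fullmatch(r"\d{4}", aid):
        raise ValueError("AID is expected to be four digits")
    location = f"gcs://aid-{aid}-snowflake-exports-lyticsio/"
    return (
        f"create storage integration {name}\n"
        f"  type = external_stage\n"
        f"  storage_provider = gcs\n"
        f"  enabled = true\n"
        f"  storage_allowed_locations = ('{location}')"
    )


# Placeholder AID for illustration; use your own account's AID.
print(storage_integration_sql("1234"))
```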

Second, retrieve your storage integration service account. You will need this to authorize the workflow:

    desc storage integration GCS_INT_LYTICS

Finally, you will need to grant the role you authorized with permission to use the newly created storage integration:

    grant usage on integration GCS_INT_LYTICS to role {your-role}

Configuration

Follow these steps to set up and configure an export job for Snowflake in the Lytics platform. If you are new to creating jobs in Lytics, see the Destinations documentation for more information.

- Select Snowflake from the list of providers.

- Select the Bulk Export job type from the list.

- Select the authorization you would like to use or create a new one.

- Enter a Label to identify this job you are creating in Lytics.

- (Optional) Enter a Description for further context on your job.

- Select the audience to export.

- Using the Database dropdown menu, select the database you would like to export data to.

- From the Schema input, select the name of the schema to export to.

- (Optional) From the Export Fields input, choose a list of fields to export. If none are selected, all fields on the profile will be included.

- (Optional) Select the Keep Updated checkbox to export the audience continuously.

- (Optional) From the Time of Day input, select the time of day to start continuous exports.

- (Optional) From the Timezone input, select a timezone for time of day.

- Click Complete to start the export.

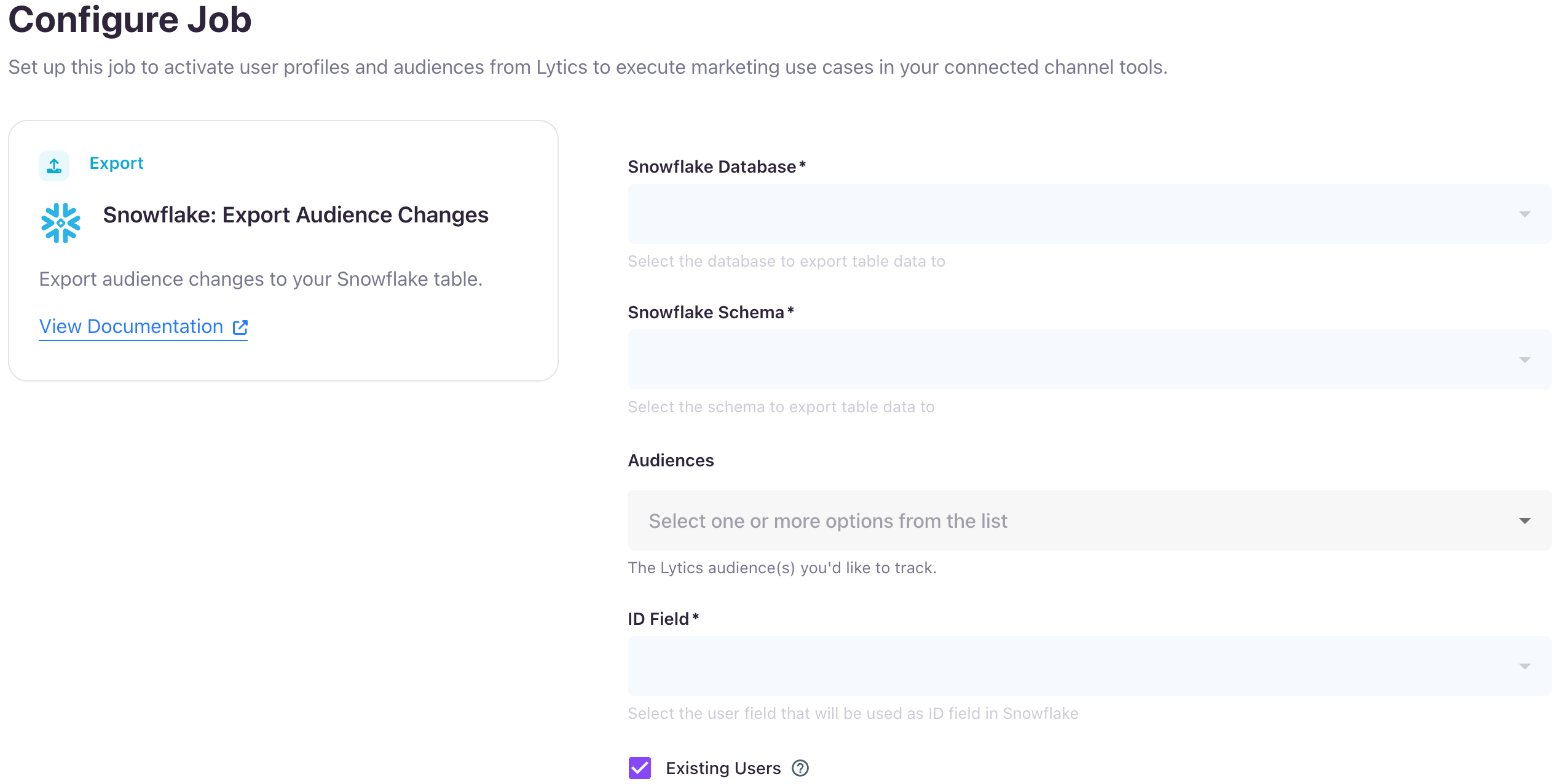

Export Audience Changes

Export audience membership change events from Lytics to Snowflake, tracking when users entered or exited the selected audience segments.

Integration Details

- Implementation Type: Server-side Integration

- Implementation Technique: Audience Trigger Integration, File Based Transfer Integration

- Frequency: Near Real-time Integration with an optional one-time Backfill of the audience after setup.

- Resulting data: Audience membership change events exported to Snowflake as rows in a table

The job monitors the selected Lytics audiences for membership changes. Whenever a user enters or exits an audience, the job sends that change to the Snowflake table.

When the job is started, it will:

1. Create a Snowflake table named LYTICS_SEG_CHANGES with four columns (IDFIELD, SEGMENT_SLUG, TIMESTAMP, EXIT).

2. Run a one-time backfill (if configured to do so).

3. Write the enter/exit data to a file in JSON format in the Lytics-managed GCS storage integration for your account (see the setup instructions above; this setup is required).

4. Once the file is ready, load the data into a temporary table using the COPY INTO command, then use the MERGE command to upsert the records into the actual table. Files are sent in batches of 100,000 events or every 5 minutes, whichever occurs first.

5. Receive real-time updates when a user enters or exits the selected audience(s), repeating steps 3 and 4.
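The "100,000 events or every 5 minutes, whichever occurs first" rule is a common size-or-age batching pattern. The sketch below shows one way such a buffer can work; it is not the Lytics implementation, and the class name, method names, and injectable clock are assumptions made so the behavior is easy to test.

```python
import time


class ChangeBatcher:
    """Buffer events and release them by size or by age, whichever comes first."""

    def __init__(self, max_events=100_000, max_age_seconds=300, clock=time.monotonic):
        self.max_events = max_events
        self.max_age_seconds = max_age_seconds
        self.clock = clock
        self.events = []
        self.started_at = None

    def add(self, event):
        """Buffer an event; return a full batch if the size limit is reached, else None."""
        if not self.events:
            # The age of a batch is measured from its first event.
            self.started_at = self.clock()
        self.events.append(event)
        if len(self.events) >= self.max_events:
            return self.flush()
        return None

    def poll(self):
        """Return the buffered batch if it has reached the age limit, else None."""
        if self.events and self.clock() - self.started_at >= self.max_age_seconds:
            return self.flush()
        return None

    def flush(self):
        """Hand back the buffered events and reset the buffer."""
        batch, self.events, self.started_at = self.events, [], None
        return batch
```

A caller would invoke `add` for every membership change and `poll` on a timer; each returned batch corresponds to one file that is then loaded and merged.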

Fields

The exported events will include the following fields in your Snowflake table:

| Field | Snowflake Type | Description |
|---|---|---|
| IDFIELD | VARCHAR(16777216) | The selected ID field value for the user |
| SEGMENT_SLUG | VARCHAR(16777216) | Lytics audience slug |
| TIMESTAMP | TIMESTAMP_TZ(9) | When the membership change occurred |
| EXIT | BOOLEAN | Whether the event is an exit (true) or an enter (false) |

Configuration

Follow these steps to set up and configure an export of audience change events to Snowflake in the Lytics platform. If you are new to creating jobs in Lytics, see the Destinations documentation for more information.

- Select Snowflake from the list of providers.

- Select the Export Audience Changes job type from the list.

- Select the Authorization you would like to use or create a new one.

- Enter a Label to identify this job you are creating in Lytics.

- (Optional) Enter a Description for further context on your job.

- Using the Snowflake Database dropdown menu, select the Snowflake database you would like to export event data to.

- From the Snowflake Schema input, select the name of the Snowflake schema to export events to.

- From the Audiences input, select up to 10 audiences you would like to track for membership changes.

- From the ID Field dropdown menu, select the user field that will be used as the unique identifier in Snowflake.

- (Optional) Select the Existing Users checkbox to export existing users as an enter event.

- Click Complete to start the export.
